Google 文件系统(一)

Oct 31, 2020 22:55 · 10828 words · 22 minute read

摘要

我们设计并实现了 GFS(Google File System),这是用于大型分布式数据密集型应用程序的可扩展的分布式文件系统。它可以在廉价的硬件上运行时提供一定的容错能力,并为大量客户端提供较高的聚合性能。

与以往的分布式文件系统有着共同的目标,我们的设计由对我们当前以及预期的应用程序工作负载和科技环境的观察所驱动,与一些早期的文件系统存在明显差异。这让我们重新审视传统选择,并探索完全不同的设计要点。

这个文件系统成功达到了我们的存储要求。它在 Google 内部作为生产和数据处理的存储平台被广泛部署,用于我们的服务和需要大量数据集的研发。迄今为止最大的集群在上千台机器的数千个磁盘上提供了数百 TB 的存储,并且几百个客户端可以并发访问。

在这篇论文中,我们将介绍旨在支持分布式应用程序的文件系统接口扩展,讨论我们设计的方方面面,并阐述微基准测试和生产的使用情况。

1. 介绍

我们设计并实现了 GFS 来满足快速增长的 Google 的数据处理需求。GFS 与以往的分布式文件系统有着共同的目标,比如性能、扩展性、可靠性和可用性。但是,它的设计由我们当前以及预期的应用程序工作负载和科技环境的观察所驱动,与一些早期的文件系统存在明显差异。这让我们重新审视传统选择,并探索完全不同的设计要点。

首先,组件故障是常态而非意外。文件系统数百甚至上千台廉价的存储机器组成并被数量可观的客户端机器访问。组件的数量和质量实际上保证了某些在任何时候都无法正常运行,某些无法从当前故障中恢复。我们见过各种由程序 bug、操作系统 bug、人为错误导致的问题,磁盘、内存、连接器、网络和电源故障。因此,持续的监控,错误探测,容错,自动恢复必须集成进系统。

其次,文件通常都很大,数 GB 的文件很常见。每个文件通常包含许多应用程序对象比如 web 文档。当我们定期处理包含了数十亿个对象的 TB 级数据集,而且还在快速增长,即使文件系统支持数十亿个几 KB 大小的文件,也很难管理。因此像 I/O 操作和块大小这样的参数需要重新考虑。

再者,大多数文件是直接追加新数据而非覆盖已经存在的数据。文件内的随机写实际上是不存在的。一旦保存完,文件就只被读取了,并且通常只能顺序读。很多数据都有这种特征。有些可能构成数据分析程序扫描的大型仓库,有些可能是正在运行的应用程序持续产生的数据流,有些可能是归档数据,有些可能是一台机器上生成的临时结果,将在另一台上同时或稍后处理。为海量文件赋予这种访问模式,追加成为性能优化和保证原子性的重点,而在客户端中缓存数据失去了吸引力。

第四,一起设计应用程序和文件系统 API 通过增加弹性对整个系统有益。举个例子,我们放松了 GFS 的一致性模型以极大地简化文件系统,而不会给应用程序带来繁重的负担。我们还引入了原子追加操作,这样多个客户端可以并发追加到文件而无需在它们之间进行额外的同步。这些将稍后详细讨论。

多个 GFS 集群当前已经部署好了。最大的那个有超过 1000 个存储节点,超过 300 TB 的磁盘存储,并且数百个不同机器上的客户端在连续不断地对其进行大量访问。

设计概述

2.1 假设

设计一个满足我们需求的文件系统既是挑战又是机遇。之前提到了一些关键的观点,现在详细阐述我们的假设。

- 系统由经常发生故障的廉价组件构成。它必须持续监控和检测自我,容错并尝试从组件故障中快速恢复。

- 系统存储非常多的大文件。我们预期有几百万文件,每个差不多 100 MB 或者更大。GB 级别的文件很常见,应当被高效管理。小文件也必须支持,但是没必要为它们优化。

- 工作负载主要有两种读取方式:大型流读取和小型随机读取。在大型流读取中,单个操作通常读取几百 KB,更常见的是 1 MB 或更多。来自同一客户端的连续操作往往会读取文件的连续区域。小型随机读取通常会按偏移量读取几 KB。注重性能的应用程序经常对小量读进行批量化和排序而不是来来回回读取。

- 工作负载也有大量顺序写入,将数据追加到文件中,通常与读取的大小类似。文件一旦写入就很少再更改了。支持对文件中任意位置的小量写操作,但不必高效。

- 这个系统必须有效地实现多客户端并发追加写同一份文件。我们的文件经常被用作生产者-消费者队列或用于多种合并。上百个生产者同时追加写一份文件。最小同步开销的原子性必不可少。文件可能随时被读写。

- 高带宽比低延迟重要得多。大多数目标应用程序都优先以高速率处理大量数据,很少对单个读写的响应时间有严格要求。

2.2 接口

GFS 提供了类似的文件系统接口,尽管并没有完整地实现像 POSIX 这样标准 API。文件以路径名称标识并在目录中以树状层次结构组织。我们支持创建、删除、打开、关闭、读 和 写 文件这样的常规操作。

此外,GFS 有 快照 和 增量日志 操作。快照以低成本创建文件或路径树的副本。增量记录允许多个客户端同时将数据追加写到同一文件中,同时还保证每个单独客户端追加操作的原子性。这对于实现多路合并结果和生产者-消费者队列非常有用,客户端可以在不带锁的情况下操作。我们发现这种特性的文件对构建大型分布式应用程序具有不可估量的价值。快照和增量日志将在 3.3 和 3.4 节中进一步详细讨论。

2.3 架构

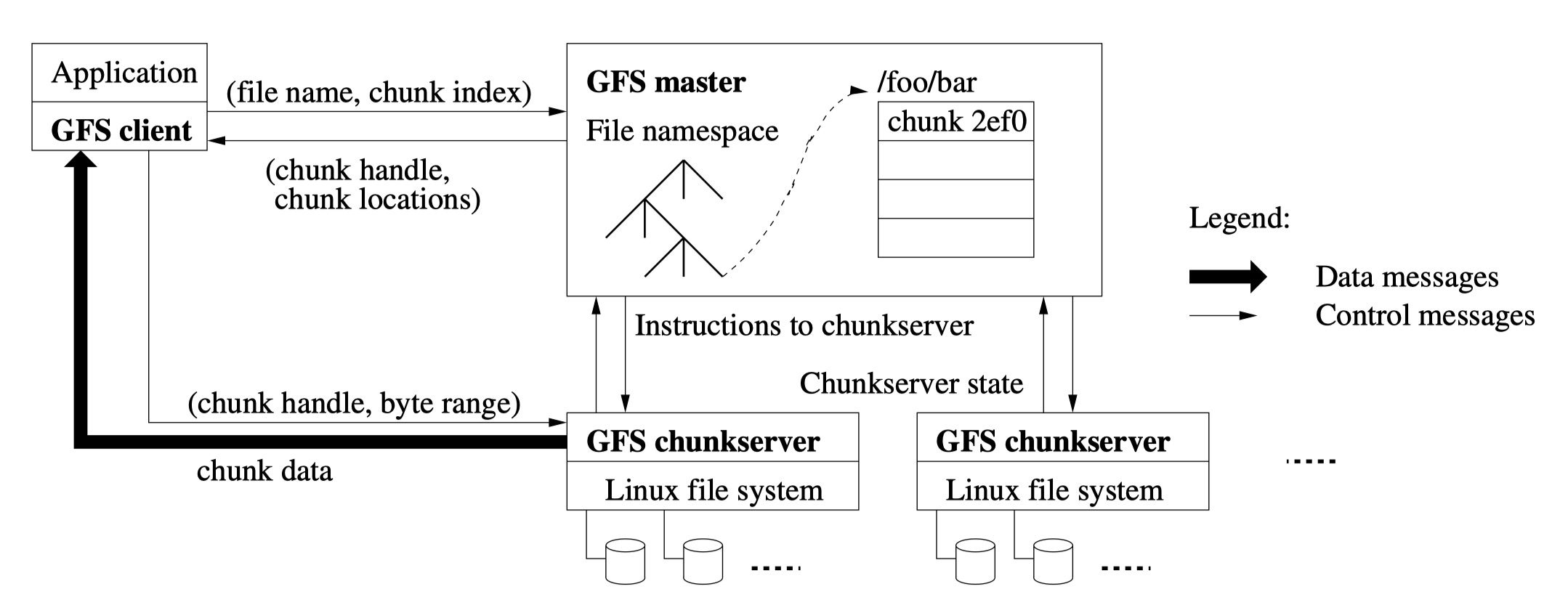

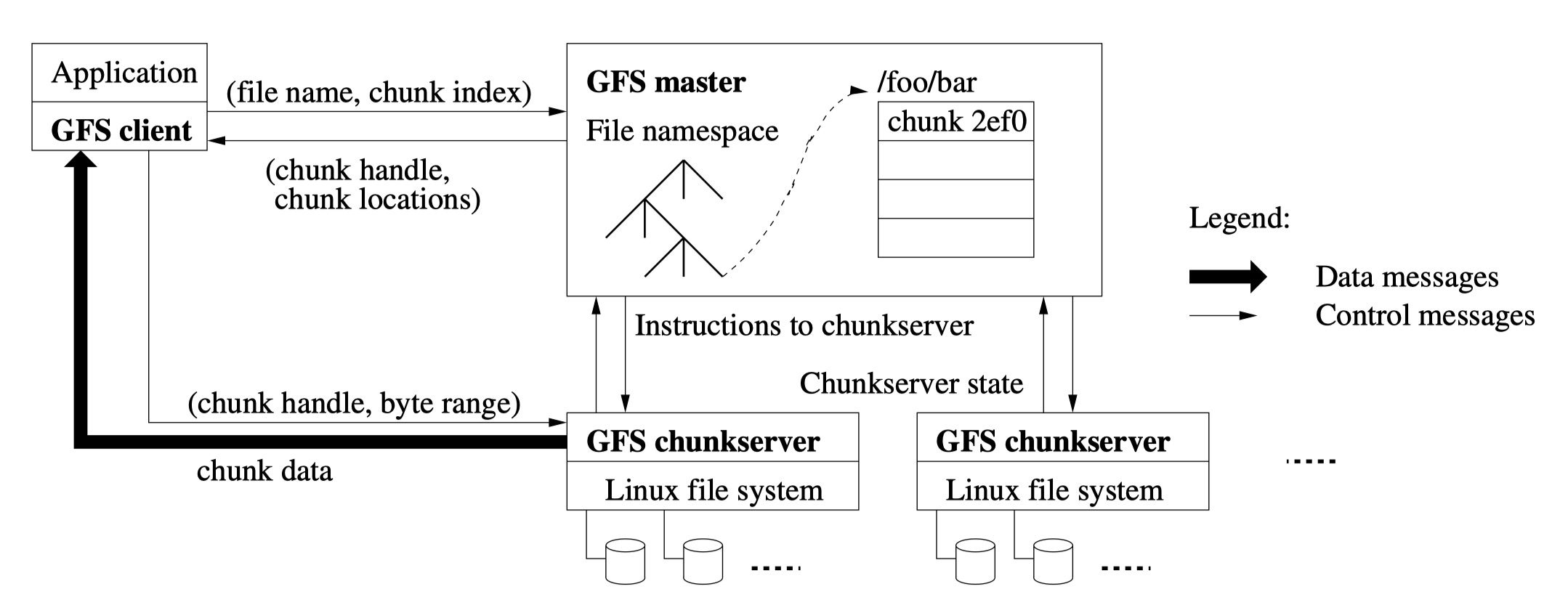

一个 GFS 集群由一个主节点和多个块服务器组成,被多个客户端访问,如图 1 所示。每个节点通常是跑着一个用户级服务器进程的廉价 Linux 机器。在同一台机器上同时运行块服务器和客户端也不是不可以,只要硬件资源允许并且能接受运行不靠谱的应用程序代码而导致的低可靠性。

文件被切成固定大小的块。每个块在创建时会分配一个不可变且全局唯一的 64 位块标识码。块服务器将数据块以 Linux 文件的形式存储在本地磁盘上,在读取或写入时由块标识码和字节范围指定。每个数据块都在多个块服务器上有副本以保证可靠性。默认我们存储 3 副本,用户也可以指定不同的副本级别。

主节点维护所有文件系统的元数据,包括命名空客、访问控制信息、文件与块的映射表和块的当前位置。它还控制整个系统的活动,比如管理块的租赁、孤立块的垃圾回收以及块在服务器之间的迁移。主节点接收每个块服务器的定期心跳包,向其发出指令并收集它们的状态。

GFS 客户端实现了文件系统 API 并与主节点和块服务器通信来读写数据,并由应用程序调用。虽然客户端会与与主节点交互以进行元数据操作,但数据是直接发送到块服务器的。我们也不提供 POSIX API,不涉及 Linux vnode 层。

客户和块服务器都不缓存文件数据。客户端缓存没啥好处,因为大多数应用程序会处理大量数据,数据太多无法缓存。没有缓存,可以消除缓存的一致性问题,从而简化客户端和整个系统。(但是客户端可以缓存元数据。)块服务器也没必要缓存因为块以本地文件的形式存储,Linux 在内存中已经有频繁被访问的数据缓冲了。

2.4 单主节点

单主节点极大地简化了我们的设计并可以全局感知复杂的块分配和复制策略。但是我们必须最小化它在读和写时的参与读,这样就不会成为瓶颈。客户端从不通过主节点来读写文件数据,而是向主节点询问应该与哪个块服务器通信。主节点在有限的时间里缓存信息,并和块服务器通信以断后。

我们参考图 1 来解释下简单的读操作时的交互。首先,使用固定的块大小,客户端将应用程序指定的文件名和字节偏移量转换为文件内的块索引。然后客户端向主节点发送包含文件名和块索引的请求,主节点给出相应的块标识码和副本的位置。客户端使用文件名和块索引作为键来缓存这些信息。

客户端随后向副本之一发送请求,指定该块标识码和字节范围。之后读取相同的块就没必要和主节点通信了,直到缓存过期或者文件被重新打开过。实际上,客户端通常会在同一请求中询问多个块,而主节点也会立即给出相关回复,以较小的代价避免了一些将来的客户端-主节点通信。

2.5 块大小

块大小是核心设计参数之一。我们选择了 64 MB,比通常的文件系统块大很多。每个块副本以纯 Linux 文件的形式存储在块服务器上,仅在需要时才扩展。要避免惰性空间分配由于内部碎片而浪费空间,可能是最大的反对意见。

大的块体积有诸多重要的优点。首先减少了客户端与主节点的交互,因为在相同的块上读写只要开始时一把查询块位置信息的请求。这些开销的节省对工作负载非常重要因为应用程序通常顺序读写大文件。即使小量随机读,客户端可以轻松地缓存 TB 级工作集的所有块位置信息。其次,由于块体积较大,客户端有更大可能性在给定的块上执行操作,因而通过在较长时间内与服务器保持 TCP 长连接来减少了网络开销。再者,也减小了保存在主节点上的元数据体积,这使得我们能够将元数据保留在内存中,又带来了其他好处,我们将在 2.6.1 节中讨论。

另一方面,较大的块体积也有缺点。一个小文件由少量块组成,可能只有一个。如果许多客户端同时访问一个文件,则存储它的块服务器可能成为热门。实际上,这倒不是主要问题,因为我们的应用程序通常会顺序读取大型的多块文件。

但是,当批处理队列系统首次使用 GFS 时,热点确实出现了:一个可执行文件作为单块文件写入 GFS,然后同时在数百台机器上启动。成百上千的并发请求使少数块服务器过载。我们增大副本数并使批处理队列错开应用程序的启动时间来解决此问题。一个潜在的长期解决方案是在这种情况下允许客户端从其他客户端读取数据。

2.6 元数据

主节点存储三种主要的元数据:文件和块的命名空间,文件到块的映射,每个块副本的位置。所有的元数据保存在主节点的内存中。前两种(命名空间和文件到块的映射)还在主节点服务器的本地磁盘上以操作日志的形式存储,并在远程机器上保留副本。使用日志可以让我们简单、可靠地更新主节点状态,避免在主节点崩溃时可能会发生数据不一致。

2.6.1 内存中的数据结构

因为元数据存储在内存中,主节点的操作非常快,而且高效地在定期在后台扫描其整个状态,用于实现块垃圾回收,在块服务器故障时重新复制以及迁移以平衡服务器之间的负载和磁盘空间使用率。4.3 和 4.4 节会进一步讨论。

这种仅仅使用内存的一个潜在的问题是块总数量和整个系统的容量受主节点有多少内存限制。这在实践中也不是特别严重的限制。主节点为每个 64 MB 的块维护少于 64 字节的元数据。大多数块是完全利用的因为大多数文件包含很多块,只有剩下的最后一部分可能存在碎片。同样,文件命名空间数据通常不会超过 64 字节,因为使用前缀压缩使文件名更紧凑。

如果要支持更大的文件系统,相较于将元数据保持在内存中而得到的简洁、可靠性、性能和伸缩性,向主节点添加额外的内存的成本不大。

2.6.2 块位置

主节点不会持久化保存有关哪个块服务器有给定副本的记录。它只在启动的时候向块服务器轮询拉取信息。主节点此后能够保持数据最新是因为它控制所有块的位置并通过定期心跳消息监控块服务器的状态。

我们起初尝试将块的位置信息在主节点持久化保存,但后来发现启动后定期向块服务器请求数据要简单得多。这就解决了集群中的块服务器加入\退出、更名、故障、重启等造成的同步问题。在上百台服务器的集群中,这些情况太常见了。

要理解该设计,我们要意识到块服务器对自己的磁盘存不存在某个块有最终决定权。试图在主节点上维护信息的一致性是没有意义的,因为块服务器上的错误可能导致块的自发性消失(例如磁盘坏了并被禁言),或者运维可能为块服务器更名。

2.6.3 操作日志

操作日志包含关键元数据的更改历史记录。这是 GFS 的核心。不仅是元数据的唯一持久化记录,而且还作为并发操作顺序的逻辑时间线。文件和块,还有他们版本,均由它们创建的逻辑时间唯一标识。

既然操作日志非常关键,我们必须妥善保管,并且在元数据的更改持久化前对客户端透明。否则,即使这些块本身仍存在,我们也要有效地丢弃整个文件系统或最近的客户端操作。因此我们将其复制到多台远程机器上,并在只在相应的日志记录刷到本地和远程磁盘后才相应客户端的操作。主节点在刷新之前将几份日志统一作批处理,从而减小刷新和复制对整个系统吞吐量的影响。

主节点通过重播操作日志来恢复文件系统状态。为了最小化启动时间,我们必须让日志小一点。每当日志增长到超过特定大小时,主节点都会检查其状态,以便可以从本地磁盘加载最新的检查点并重播有限数量的日志记录来进行恢复。检查点在紧凑的 B 树中,这样就可以直接映射到内存中并用于命名空间查找而无需额外的解析,大大加快恢复速度并提高了可用性。

因为构建一个检查点要花一些时间,主节点的内部状态以这样的结构方式组织能够创建新的检查点而不会延迟新的变化。主节点切换至新的日志文件并在一个独立的线程中创建新的检查点。新的检查点包含了切换前的所有变化,如果集群规模只有小几百万份文件一分钟内就能创建好。完成后会被写入本地和远程磁盘。

恢复仅需要最新的完整的检查点和后续日志文件。更老的检查点和日志文件会被自由地删除,但是我们会保留一点以容灾。有问题的检查点不会有影响因为恢复的代码会跳过不完整的检查点。

2.7 一致性模型

GFS 有宽松的一致性模型,很好地支持我们的分布式应用程序,但是实现起来相对简单有效。

2.7.1 GFS 的保证

文件命名空间的变化(例如创建)是原子性的。它们仅仅由主节点处理:命名空间的锁保证了原子性和正确性(4.1 节);主节点的操作日志为这些操作定义了全局次序。

数据变化后文件区域的状态取决于变化的类型,是否成功以及是否存在并发变化。图 1 概括了结论。如果所有客户端始终看到相同的数据,则文件区域是一致的,无论它们从哪个副本读取。如果文件数据改变,在区域定义后,如果该区域是一致的,那么客户端将看到改变的全部内容。并发的成功更改使该区域不确定但保持一致:所有客户端看到相同的数据,但可能无法反映任何更改的内容。通常由多个更改混合而成。一次失败的更改会导致区域不一致:不同的客户端可能在不同的时间看到不同的数据。我们在下面讲一下应用程序如何区分已定义的区域和未定义的区域。应用程序无需进一步区分不同类型的未定义区域。

数据变更可能是写入或记录追加。写操作导致数据以应用程序指定的文件偏移量写入。记录追加使数据或记录即使在存在并发更改的情况下也至少原子性地追加一次,但以一个 GFS 选择的偏移量(3.3 节)。(相反,“常规”追加只是客户端认为其为文件末尾的偏移量的写入。)偏移量被返回给客户端,并标记上包含记录的已定义区域的开始。另外,GFS 可能会在其间填充数据或记录重复项。它们占据被认为是不一致的区域,和用户数据的数量相比通常相形见绌。

在一串成功的变更后,可以确保改变的文件区域包含了最后一次更改所写入的数据。GFS 通过以下方式实现:(a)在所有副本上以同样的顺序对块应用更改(3.1 节),(b)使用块版本号来检测任何因为块服务器宕机导致未变更而过期的副本(4.5 节)。老的副本将永不参与变更,也不会让客户端询问主块的位置。它们会被当作垃圾尽早地收集起来。

由于客户端会缓存块的位置,可能会在信息被刷新前从过期的副本中读取。这个窗口受限于缓存条目的超时和下一次打开文件的时间,会清掉该文件在缓存中的所有块信息。此外由于大多数文件都是追加的,一个陈旧的副本通常会返回一个过早结束的块而不是过期的信息。当 reader 尝试重新联系主节点时,将马上获取到当前的块位置。

在变更成功后的很长一段时间里,组件故障肯定会毁坏数据。GFS 通过主节点和所有块服务器之间定期心跳来识别失败的块服务器,通过校验和(5.2 节)来检测数据损坏。一旦出现问题,数据会尽快从有效的副本中恢复(4.3 节)。只有在 GFS 做出反应前所有副本都丢失了,一个数据块才会不可逆的丢失,通常在几分钟以内。即使这样,它只是变得不可用,而不是彻底损坏:应用程序收到的是明显的错误而不是损坏的数据。

2.7.2 对应用程序的影响

GFS 应用程序可以用一些简单的技术来适应宽松的一致性模型,这些技术也用于其他目的:追加而非覆盖,检查点,以及编写自验证、自识别的记录。

实际上,我们所有的应用程序都是通过追加而不是覆写来改变文件。一个典型的案例,一个 writer 从头到尾生成一个文件。在写完所有数据后,它将原子地为文件重命名,或定期检查有多少数据被成功写入。检查点可能包括应用程序级别的校验和。reader 只验证和处理文件区域直到最后一个检查点,已知该区域处于已定义状态。抛开一致性和并发的问题,这种方法很有用。与随机写相比,追加高效得多,对应用程序故障的弹性也更强。检查点允许 writer 以增量的方式重新开始,而 reader 不至于处理不完整的数据。

另一个典型案例,许多 writer 并发追加至一个文件中来合并结果或作为一个生产者-消费者队列。记录追加的 append-at-least-once 语义保留了每个 writer 的输出。reader 处理随之而来偶尔出现的重复填充的状况。每一条由 writer 准备的记录都包含额外的信息,比如校验和,这样就可以验证它是否有效了。reader 可以使用校验和来识别和丢弃额外的填充物和记录碎片。如果它不能容忍偶尔的重复(例如,如果它们会触发非幂等操作),可以在记录中使用唯一的标识符将它们剔除,这些标识符通常用于命名相应的应用程序实体,比如 web 文档。这些用于记录 I/O 的功能(除了重复剔除)都在我们的共享代码库中,并应用于 Google 的其他文件接口实现。有了这些,相同的记录序列和罕见的重复总是能传递给读取记录的 reader。

Abstract

We have designed and implemented the Google File System, a scalable distributed file system for large distributed data-intensive applications. It provides fault tolerance while running on inexpensive commodity hardware, and it delivers high aggregate performance to a large number of clients.

While sharing many of the same goals as previous distributed file systems, our design has been driven by observations of our application workloads and technological environment, both current and anticipated, that reflect a marked departure from some earlier file system assumptions. This has led us to reexamine traditional choices and explore radically different design points.

The file system has successfully met our storage needs. It is widely deployed with Google as the storage platform for the generation and processing of data used by our service as well as research and development efforts that require large data sets. The largest cluster to date provides hundreds of terabytes of storage across thousands of disks on over a thousand machines, and it is concurrently accessed by hundreds of clients.

In this paper, we present file system interface extensions designed to support distributed applications, discuss many aspects of our design, and report measurements from both micro-benchmarks and read world use.

1. Introduction

We have designed and implemented the Google File System (GFS) to meet the rapidly growing demands of Google’s data processing needs. GFS shares many of the same goals as previous distributed file systems such as performance, scalability, reliability, and availability. However, its design has been driven by key observations of our application workloads and technological environments, both current and anticipated, that reflects a marked departure from some earlier file system design assumptions. We have reexamined traditional choices and explored radically different points in the design space.

First, component failures are both the norm rather than the exception. The file system consists of hundreds or even thousands of storage machines built from inexpensive commodity parts and is accessed by a comparable number of client machines. The quantity and quality of the components virtually guarantee that some are not functional at any given time and some will not recover from their current failures. We have seen problems caused by application bugs, operating system bugs, human errors, and the failures of disks, memory, connectors, networking, and power supplies. Therefore, constant monitoring, error detection, fault tolerance, and automatic recovery must be integral to the system.

Second, files are huge by traditional standards, Multi-GB files are common. Each file typically contains many application objects such as web documents. When we are regularly working with fast growing data sets of many TBs comprising billions of objects, it is unwieldy to manage billions of approximately KB-sized files even when the file system could supported it. As a result, design assumptions and parameters such as I/O operation and block sizes have to be revisited.

Third, most file are mutated by appending new data rather than overwriting existing data. Random writes within a file are practically non-existent. Once written, the files are only read, and often only sequentially. A variety of data share these characteristics. Some may constitute large repositories that data analysis program scan through. Some may be data streams continuously generated by running applications. Some may be archival data. Some may be intermediate results produced on one machine and processed on another, whether simultaneously or later in time. Given this access pattern on huge files, appending becomes the focus of performance optimization and atomicity guarantees, while caching data blocks in the client loses its appeal.

Fourth, co-designing the applications and the file system API benefits the overall system by increasing our flexibility. For example, we have relaxed GFS’s consistency model to vastly simplify the file system without imposing an onerous burden on the applications. We have also introduced an atomic append operation so that multiple clients can append concurrently to a file without extra synchronization between them. These will be discussed in more details later in the paper.

Multiple GFS clusters are currently deployed for different purposes. The largest ones have over 1000 storage nodes, over 300 TB of disk storage, and are heavily accessed by hundreds of clients on distinct machines on a continuous basis.

2. Design Overview

2.1 Assumptions

In designing a file system for our needs, we have been guided by assumptions that offer both challenges and opportunities. We alluded to some key observations earlier and now lay out our assumptions in more details.

- The System is built from many inexpensive commodity components that often fail. It must constantly monitor itself and detect, tolerate, and recover promptly from component failures on a routine basis.

- The system stores a modest number of large files. We expect a few million file, each typically 100 MB or large in size. Multi-GB files are common case and should be managed efficiently. Small files must be supported, but we need not optimized for them.

- The workloads primarily consist of two kinds of reads: large streaming reads and small random reads. In large streaming reads, individual operations typically read hundreds of KBs, more commonly 1 MB or more. Successive operations from the same client often read through a contiguous region of a file. A small random read typically reads a few KBs at some arbitrary offset. Performance-conscious applications often batch and sort their small reads to advance steadily through the file rather than go back and forth.

- The workloads also have many large, sequential writes that append data to files. Typical operation sizes are similar to those for reads. Once written, files are seldom modified again. Small writes at arbitrary positions in a file are supported but do not have to be efficient.

- The system must efficiently implement well-defined semantics for multiple clients that concurrently append to the same file. Our files are often used as producer-consumer queues or for many-way merging. Hundreds of producers, running one per machine, will concurrently append to a file. Atomicity with minimal synchronization overhead is essential. The file may be read later, or a consumer may be reading through the file simultaneously.

- High sustained bandwidth is more important than low latency. Most our target applications place a premium on processing data in bulk at a high rate, while few have stringent response time requirements for an individual read or write.

2.2 Interface

GFS provides a familiar file system interface, though it does not implement a standard API such as POSIX. Files are organized hierarchically in directories and identified by path names. We support the usual operations to create, delete, open, close, read, and write files.

Moreover, GFS has snapshot and record append operations. Snapshot creates a copy of a file or a directory tree at low cost. Record append allows multiple clients to append data to the same file concurrently while guaranteeing the atomicity of each individual client’s append. It is useful for implementing multi-way merge results and producer-consumer queues that many clients can simultaneously append to without additional locking. We have found these types of files to be invaluable in building large distributed applications. Snapshot and record append are discussed further in Sections 3.4 and 3.3 respectively.

2.3 Architecture

A GFS cluster consists of a single master and multiple chunkservers and is accessed by multiple clients, as shown in Figure 1. Each of these is typically a commodity Linux machine running a user-level server process. It is easy to run both a chunkserver and a client on the same machine, as long as machine resources permit and the lower reliability caused by running possibly flaky application code is acceptable.

Files are divided into fixed-size chunks. Each chunk is identified by an immutable and globally unique 64 bit chunk handle assigned by the master at the time of chunk creation. Chunkservers store chunks on local disks as Linux files and read or write chunk data specified by a chunk handle and byte range. For reliability, each chunk is replicated on multiple chunkservers. By default, we store three replicas, though users can designate different replication levels for different regions of the file namespaces.

The master maintains all file system metadata. This includes the namespace, access control information, the mapping from files to chunks, and the current locations of chunks. It also controls system-wide activities such as chunk lease management, garbage collection of orphaned chunks, and chunk migration between chunkservers. The master periodically communicates with each chunkserver in HeartBeat messages to give it instructions and collect its state.

GFS client code linked into each application implements the file system API and communicates with the master and chunkservers to read and write data on behalf of the application. Clients interact with the master for metadata operations, but all data-bearing communications goes directly to the chunkservers. We do not provide the POSIX API and therefore need not hook into the Linux vnode layer.

Neither the client not the chunkserver caches file data. Clients caches offer little benefit because most applications stream through huge files or have working sets too large to be cached. Not having them simplified the client and the overall system by eliminating cache coherence issues.(Clients do cache metadata, however.) Chunkservers need not cache file data because chunks are stored as local files and so Linux’s buffer cache already keeps frequently accessed data in memory.

2.4 Single master

Having a single master vastly simplified our design and enables the master to make sophisticated chunk placement and replication decisions using global knowledge. However, we must minimize its involvement in reads and writes so that it does not become a bottleneck. Clients never read and write file data through the master. Instead, a client asks master which chunkserver it should contact. It caches this information for a limited time and interacts with the chunkservers directly for many subsequent operations.

Let us explain the interactions for a simple read with reference to Figure 1. First, using the fixed chunk size, the client translates the file name and byte offset specified by the application into a chunk index within the file. Then, it sends the master a request containing the file name and chunk index. The master replies with the corresponding chunk handle and locations of the replicas. The client caches this information using the file name and chunk index as the key.

The client then sends a request to one of the replicas, most likely the closet one. The request specified the chunk handle and a byte range within that chunk. Further reads of the same chunk require no more client-master interaction until the cached information expires or the file is reopened. In fact, the client typically asks for multiple chunks in the same request and the master can also include the information for chunks immediately following those requested. This extra information sidesteps several future client-master interactions at practically extra cost.

2.5 Chunk Size

Chunk size is one of the key design parameters. We have chosen 64 MB, which is much larger than typical file system block sizes. Each chunk replica is stored as a plain Linux file on a chunkserver and is extended only as needed. Lazy space allocation avoids wasting space due to internal fragmentation, perhaps the greatest objection against suck a large chunk size.

A large chunk size offers several important advantages. First, in reduces clients’ need to interact with the master because reads and writes on the same chunk require only one initial request to the master for chunk location information. The reduction is especially significant for our workloads because applications mostly read and write large files sequentially. Even for small random reads, the client can comfortably cache all the chunk location information for a multi-TB working set. Second, since on a large chunk, a client is more likely to perform may operations on a given chunk, it can reduce network overhead by keeping a persistent TCP connection to the chunkserver over an extended period of time. Third, it reduces the size of the metadata stored on the master. This allows us to keep the metadata in memory, which in turn brings other advantages that we will discuss in Section 2.6.1.

On the other hand, a large chunk size, even with lazy space allocation, has its disadvantages. A small file consists of a small number of chunks, perhaps just one. The chunkservers storing those chunks may become hot spots if many clients are accessing the same file. In practice, hot spots have not been a major issue because our applications mostly read large multi-chunks files sequentially.

However, hot spots did develop when GFS was first used by a batch-queue system: an executable was written to GFS as a single-chunk file and then started on hundreds of machines at the same time. The few chunkservers storing this executable were overloaded by hundreds of simultaneous requests. We fixed this problem by storing such executables with a higher replication factor and by making the batch-queue system stagger application start times. A potential long-term solution is to allow clients to read data from other clients in such situations.

2.6 Metadata

The master stores three major types of metadata: the file and chunk namespaces, the mapping from files to chunks, and the locations of each chunk’s replicas. All metadata is kept in the master’s memory. The first two types (namespaces and file-to-chunk mapping) are also kept persistent by logging mutations to an operation log stored on the master’s local disk and replicated on remote machines. Using a log allows us to update the master state simply, reliably, and without risking inconsistencies in the event of a master crash.

2.6.1 In-Memory Data Structures

Since metadata is stored in memory, master operations are fast. Furthermore, it is easy and efficient for the master to periodically scan through its entire state in the background. This periodic scanning is used to implement chunk garbage collection, re-replication in the presence of chunkserver failures, and chunk migration to balance load and disk space usage across chunkservers. Sections 4.3 and 4.4 will discuss these activities further.

One potential concern for this memory-only approach is that the number of chunks and hence the capacity of the whole system is limited by how much memory the master has. This is not a serious limitation in practice. The master maintains less than 64 bytes of metadata for each 64 MB chunk. Most chunks are full because most files contain many chunks, only the last of which may be partially filled. Similarly, the file namespace data typically requires less then 64 bytes per file because it stores file names compactly using prefix compression.

If necessary to support even larger file systems, the cost of adding extra memory to the master is a small price to pay for the simplicity, reliability, performance, and flexibility we gain by storing the metadata in memory.

2.6.2 Chunk Locations

The master does not keep a persistent record of which chunkservers have a replica of a given chunk. It simply polls chunkservers for that information at startup. The master can keep itself up-to-data thereafter because it controls all chunk placement and monitors chunkserver status with regular HeartBeat messages.

We initially attempted to keep chunk location information persistently at the master, but we decided that it was much simpler to request the data from chunkservers at startup, and periodically thereafter. This eliminated the problem of keeping the master and chunkservers in sync as chunkservers join and leave the cluster, change names, fail, restart, and so on. In a cluster with hundreds of servers, these events all too often.

Another way to understand this design is to realize that a chunkserver has the final word over what chunks it does or does not have on its own disks. There is no point in trying to maintain a consistent view of this information on the master because errors on a chunkserver may cause chunks to vanish spontaneously (e.g., a disk may go bad and be disabled) or an operator may rename a chunkserver.

2.6.3 Operation Log

The operation log contains a historical record of critical metadata changes. It is central to GFS. Not only is it the only persistent record of metadata, but it also serves as a logical time line that defines the order of concurrent operations. Files and chunks, as well as their versions (see Section 4.5), are all uniquely and eternally identified by the logical times at which they were created.

Since the operation log is critical, we must store it reliably and not make changes visible to clients until metadata changes are made persistent. Otherwise, we efficiently lose the whole file system or recent client operations even if the chunks themselves survive. Therefore, we replicate it on multiple remote machines and respond to a client operation only after flushing the corresponding log record to disk both locally and remotely. The master batches several log records together before flushing thereby reducing the impact of flushing and replication on overall system throughput.

The master recovers its file system state by replaying the operation log. To minimize startup time, we must keep the log small. The master checkpoints its state whenever the log grows beyond a certain size so that it can recover by loading the latest checkpoint from local disk and replaying only the limited number of log records after that. The checkpoint is in compact B-tree like from that can be directly mapped into memory and used for namespace lookup without extra parsing. This further speeds up recovery and improves availability.

Because building a checkpoint can take a while, the master’s internal state is structured in such a way that a new checkpoint can be created without delaying incoming mutations. The master switches to a new log file and creates the new checkpoint in a separated thread. The new checkpoint includes all mutations before the switch. It can be created in a minute or so for a cluster with a few million files. When completed, it is written to disk both locally and remotely.

Recovery needs only the latest complete checkpoint and subsequent log files. Older checkpoints and log files can be freely deleted, though we keep a few around to guard against catastrophes. A failure during checkpointing does not affect correctness because the recovery code detects and skips incomplete checkpoints.

2.7 Consistency Model

GFS has a relaxed consistency model that supports our highly distributed applications well but remains relatively simple and efficient to implement.

2.7.1 Guarantees by GFS

File namespaces mutations (e.g., file creation) are atomic. They are handled exclusively by the master: namespace locking guarantees atomicity and correctness (Section 4.1); the master’s operation log defines a global total order of these operations (Section 2.6.3).

The state of a file region after a data mutation depends on the type of mutation, whether it succeeds or fails, and whether there are concurrent mutations. Table 1 summarizes the result. A file region is consistent if all clients will always see the same data, regardless of which replicas they read from. A region is defined after a file data mutation if it consistent and clients will see what the mutation writes in its entirety. When a mutation succeeds without interference from concurrent writers, the affected region is defined (and by implication consistent): all clients will always see what the mutation has written. Concurrent successful mutations leave the region undefined but consistent: all clients see the same data, but it may not reflect what any one mutation has written. Typically, it consists of mingled fragments from multiple mutations. A failed mutation makes the region inconsistent (hence also undefined): different clients may see different data at different times. We describe below how our applications can distinguish defined regions from undefined regions. The applications do not need to further distinguish between different kinds of undefined regions.

Data mutations may be writes or record appends. A write causes data to be written at an application-specified file offset. A record append causes data (the “record”) to be appended atomically at least once even in the presence of concurrent mutations, but at an offset of GFS’s choosing (Section 3.3). (In contrast, a “regular” append is merely a write at an offset that the client believes to be the current end of file.) The offset is returned to the client and marks the beginning of a defined region that contains the record. In addition, GFS may insert padding or record duplicates in between. They occupy regions considered to be inconsistent and are typically dwarfed by the amount of user data.

After a sequence of successful mutations, the mutated file region is guaranteed to be defined and contain the data written by the last mutation. GFS achieves this by (a) applying mutations to a chunk in the same order on all its replicas (Section 3.1), and (b) using chunk version numbers to detect any replica that has become stable because it has missed mutations while its chunkserver was down (Section 4.5). Stale replicas will never be involved in a mutation or given to clients asking the master for chunk locations. They are garbage collected at the earliest opportunity.

Since clients cache chunk locations, they may read from a stale replica before that information is refreshed. This window is limited by the cache entry’s timeout and the next open of the file, which purges from the cache all chunk information for that file. Moreover, as most of our files are append-only, a stale replica usually returns a premature end of chunk rather than outdated data. When a reader retries and contacts the master, it will immediately get current chunk locations.

Long after a successful mutation, component failures can of course still corrupt or destroy data. GFS identifies failed chunkservers by regular handshakes between master and all chunkservers and detects data corruption by checksumming (Section 5.2). Once a problem surfaces, the data is restored from valid replicas as soon as possible (Section 4.3). A chunk is lost irreversibly only if all its replicas are lost before GFS can react, typically within minutes. Even in this case, it becomes unavailable, not corrupted: applications receive clear errors rather than corrupt data.

2.7.2 Implications for Applications

GFS applications can accommodate the relaxed consistency model with a few simple techniques already needed for other purposes: relying on appends rather than overwrites, checkpointing, and writing self-validating, self-identifying records.

Practically all our applications mutate files by appending rather than overwriting. In one typical use, a writer generates a file from beginning to end. It atomically renames the file to a permanent name after writing all the data, or periodically checkpoints how much has been successfully written. Checkpoints may also include application-level checksums. Readers verify and process only the file region up to the last checkpoint, which is known to be in the defined state. Regardless of consistency and concurrency issues, this approach has served us well. Appending is far more efficient and more resilient to application failures than random writes. Checkpointing allows writers to restart incrementally and keeps readers from processing successfully written file data that is still incomplete from the application’s perspective.

In the other typical use, many writers concurrently append to a file for merged results or as a producer-consumer queue. Record append’s append-at-least-once semantics preserves each writer’s output. Readers deal with the occasional padding and duplicates as follows. Each record prepared by the writer contains extra information like checksums so that its validity can be verified. A reader can identify and discard extra padding and record fragments using the checksums. If it cannot tolerate the occasional duplicates (e.g., if they would trigger non-idempotent operations), it can filter them out using unique identifiers in the records, which are often needed anyway to name corresponding application entities such as web documents. These functionalities for record I/O (except duplicate removal) are in library code shared by our applications and applicable to other file interface implementations at Google. With that, the same sequence of records, plus rare duplicates, is always delivered to the record reader.