OpenYurt Raven: Communication Across Physical Regions

Dec 9, 2023 15:00 · 2769 words · 6 minute read

In OpenYurt, the Raven component provides communication between Pods that sit in different physical regions (on different nodes).

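When OpenYurt is deployed on a Kubernetes cluster, the existing CNI network plugin stays in place. OpenYurt Raven hijacks the traffic that would normally cross nodes and carries it through a VPN tunnel instead of the original VXLAN or IPIP tunnel, which lets nodes in different physical regions communicate.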

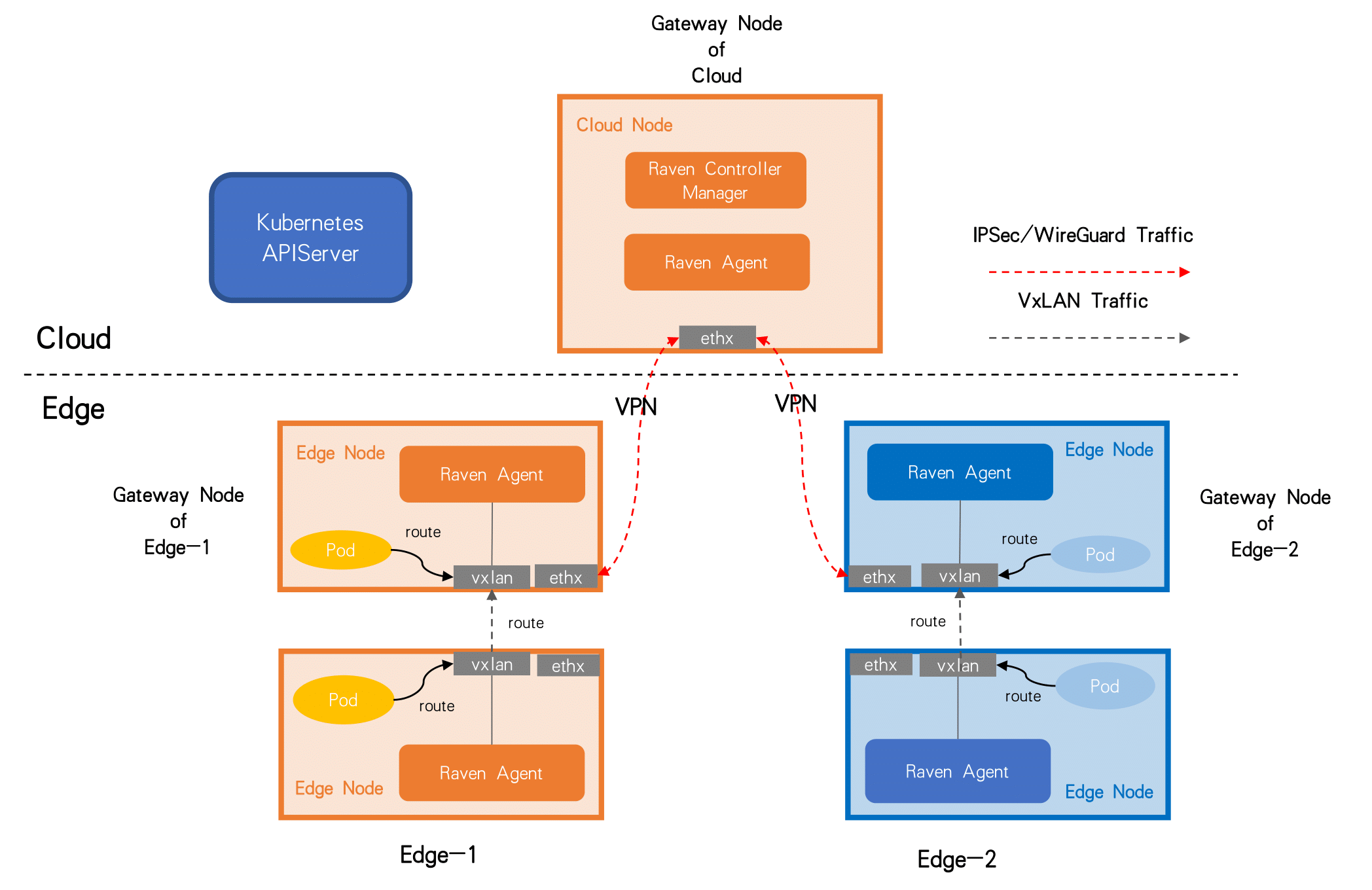

OpenYurt Raven Architecture

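Raven consists of two main components:

- Raven Controller Manager: a Kubernetes controller deployed as a Deployment. It watches the state of the edge nodes and elects one suitable node in each edge node pool (physical region) as the Gateway Node. All cross-region traffic is forwarded through that node.
- Raven Agent: deployed as a DaemonSet, one instance per node. It configures routes or VPN tunnel settings according to the role of the node it runs on.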
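To make the "one gateway per node pool" idea concrete, here is a minimal Go sketch of per-pool gateway election. It is hypothetical throughout: the `Node` type, the pool names, and the rule "pick the Ready node with the lexicographically smallest name" are illustrative assumptions, not the selection logic Raven Controller Manager actually uses.

```go
package main

import (
	"fmt"
	"sort"
)

// Node is a hypothetical, simplified view of a cluster node.
type Node struct {
	Name  string
	Pool  string // node pool, i.e. physical region
	Ready bool
}

// electGateways picks one gateway node per pool.
// Assumed rule (illustrative only): the Ready node with the smallest name wins.
// Pools without any Ready node get no gateway.
func electGateways(nodes []Node) map[string]string {
	candidates := map[string][]string{}
	for _, n := range nodes {
		if n.Ready {
			candidates[n.Pool] = append(candidates[n.Pool], n.Name)
		}
	}
	gateways := make(map[string]string, len(candidates))
	for pool, names := range candidates {
		sort.Strings(names)
		gateways[pool] = names[0]
	}
	return gateways
}

func main() {
	// Hypothetical pool assignment for the example cluster.
	nodes := []Node{
		{Name: "yurt-cloud", Pool: "cloud", Ready: true},
		{Name: "edge-node-2", Pool: "edge", Ready: true},
		{Name: "edge-node-1", Pool: "edge", Ready: true},
	}
	gw := electGateways(nodes)
	fmt.Println(gw["cloud"], gw["edge"]) // yurt-cloud edge-node-1
}
```

A real controller would also weigh things like node health and whether a node can expose a public endpoint; the sketch only shows the grouping-then-choosing shape.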

How Raven IPsec Communication Works

A spoiler up front: by default Raven uses Libreswan as its VPN software, and Libreswan is built on IPsec tunnels.

The example cluster has 3 nodes. The network plugin is flannel, and cross-node traffic goes through VXLAN tunnels:

```
$ kubectl get nodes -o wide
NAME          STATUS   ROLES                         AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                           KERNEL-VERSION                 CONTAINER-RUNTIME
edge-node-1   Ready    <none>                        23d   v1.22.3   10.0.0.210      <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.13.1.el8_6.x86_64   containerd://1.6.24
edge-node-2   Ready    <none>                        17d   v1.22.3   10.0.0.80       <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.13.1.el8_6.x86_64   containerd://1.6.24
yurt-cloud    Ready    control-plane,master,worker   24d   v1.22.3   172.20.163.65   <none>        Rocky Linux 8.9 (Green Obsidian)   4.18.0-477.27.1.el8_8.x86_64   containerd://1.6.4

$ kubectl get po -n kube-system -o wide | grep raven-agent-ds
raven-agent-ds-h27kh   1/1     Running   0          16d   172.20.163.65   yurt-cloud    <none>           <none>
raven-agent-ds-js2lz   1/1     Running   0          16d   10.0.0.80       edge-node-2   <none>           <none>
raven-agent-ds-wnsnc   1/1     Running   0          16d   10.0.0.210      edge-node-1   <none>           <none>

$ kubectl get po -n kube-flannel
NAME                    READY   STATUS    RESTARTS   AGE
kube-flannel-ds-9ffgd   1/1     Running   0          24d
kube-flannel-ds-nw2ds   1/1     Running   0          23d
kube-flannel-ds-rp4hl   1/1     Running   0          17d

$ ethtool -i flannel.1
driver: vxlan
version: 0.1
firmware-version:
expansion-rom-version:
bus-info:
supports-statistics: no
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no
```

yurt-cloud and the edge-node-n nodes are in different physical regions and cannot reach each other directly:

```
$ ping 10.0.0.80
PING 10.0.0.80 (10.0.0.80) 56(84) bytes of data.
^C
--- 10.0.0.80 ping statistics ---
14 packets transmitted, 0 received, 100% packet loss, time 13348ms

$ ping 10.0.0.210
PING 10.0.0.210 (10.0.0.210) 56(84) bytes of data.
^C
--- 10.0.0.210 ping statistics ---
5 packets transmitted, 0 received, 100% packet loss, time 4132ms
```

Yet from the yurt-cloud node we can still reach the nginx container running on edge-node-1:

```
$ kubectl get po -o wide | grep nginx
nginx   1/1     Running   0          15d   10.233.68.7   edge-node-1   <none>           <none>

$ curl -i http://10.233.68.7
HTTP/1.1 200 OK
Server: nginx/1.14.2
Date: Thu, 07 Dec 2023 09:43:43 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 04 Dec 2018 14:44:49 GMT
Connection: keep-alive
ETag: "5c0692e1-264"
Accept-Ranges: bytes
```

Because yurt-cloud is the only node in its physical region, it is naturally its own gateway node:

```
$ kubectl get gw gw-cloud -o jsonpath='{.status}' | jq
{
  "activeEndpoints": [
    {
      "config": {
        "enable-l3-tunnel": "true"
      },
      "nodeName": "yurt-cloud",
      "port": 4500,
      "publicIP": "172.20.163.65",
      "type": "tunnel"
    }
  ],
  "nodes": [
    {
      "nodeName": "yurt-cloud",
      "privateIP": "172.20.163.65",
      "subnets": [
        "10.233.64.0/24"
      ]
    }
  ]
}
```

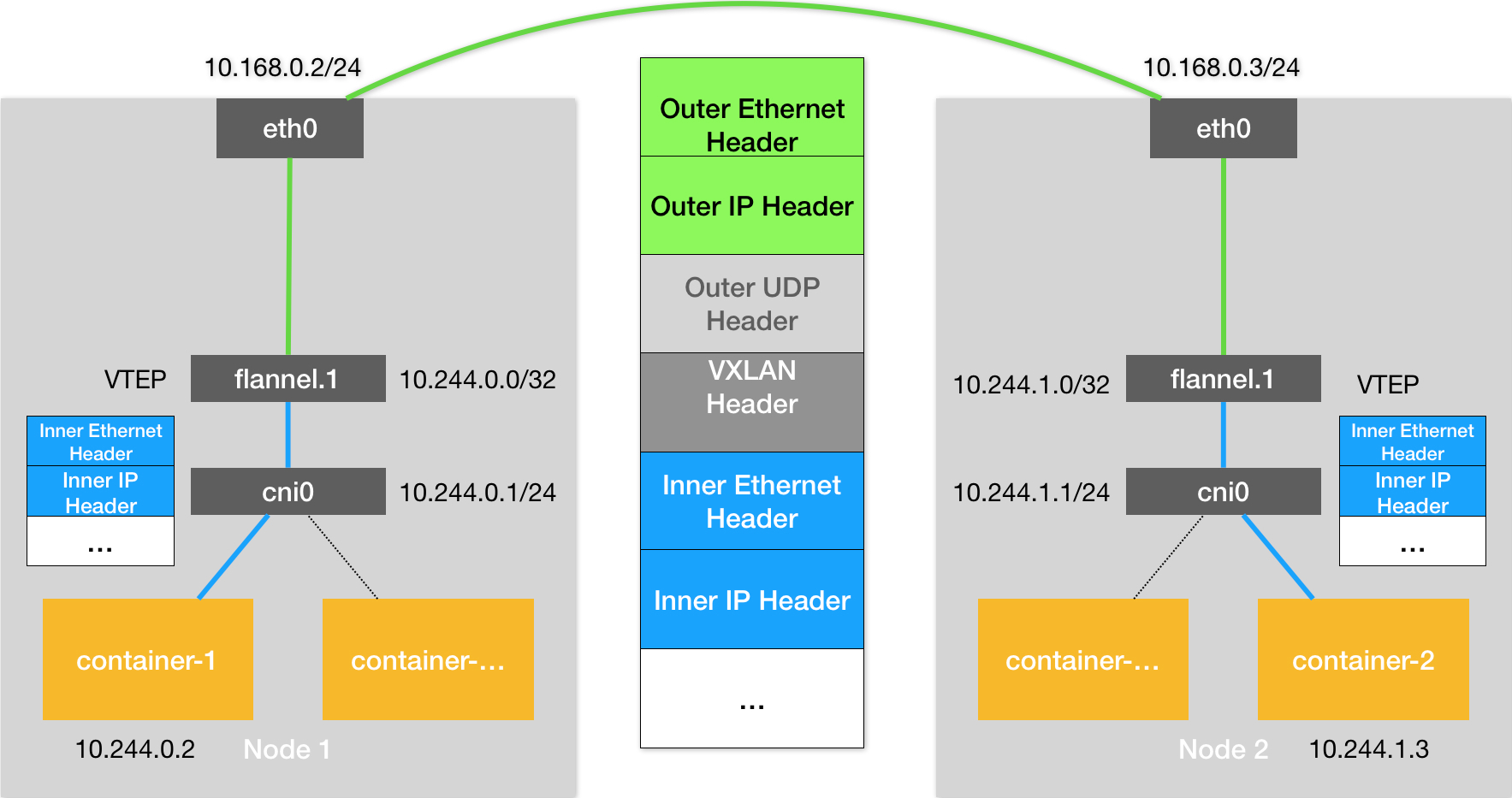

flannel CNI VXLAN 模式如下图:

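If yurt-cloud and edge-node-n were in the same physical region and could reach each other directly, accessing the nginx Pod on edge-node-1 with curl from yurt-cloud would go like this:

- The TCP packet first reaches the flannel.1 VTEP device on yurt-cloud
- It is encapsulated and sent through the VXLAN tunnel to the flannel.1 device on edge-node-1
- edge-node-1 decapsulates the VXLAN packet, recovering the original TCP packet
- Following the routing table, the packet reaches the cni0 bridge on edge-node-1 and is forwarded into the nginx Pod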
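For reference, the encapsulation in the second step prepends an 8-byte VXLAN header (RFC 7348) and carries the result in a UDP datagram. The Go sketch below builds only that header. The VNI value of 1 is an assumption (it is flannel's usual default for flannel.1, but this cluster's value is not shown), and the outer Ethernet, IP, and UDP headers that the kernel's vxlan driver adds are not modeled.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// vxlanHeader builds the 8-byte VXLAN header defined in RFC 7348:
// byte 0 holds the flags (0x08 = "VNI is valid"), bytes 1-3 are reserved,
// bytes 4-6 hold the 24-bit VNI, and byte 7 is reserved.
func vxlanHeader(vni uint32) [8]byte {
	var h [8]byte
	h[0] = 0x08
	// Place the 24-bit VNI in the upper three bytes of the second 32-bit word.
	binary.BigEndian.PutUint32(h[4:], vni<<8)
	return h
}

func main() {
	// Assumption: VNI 1, flannel's typical default for the flannel.1 device.
	h := vxlanHeader(1)
	fmt.Printf("% x\n", h[:]) // 08 00 00 00 00 00 01 00
}
```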

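In reality, in a Kubernetes cluster with Raven deployed, the TCP packet is hijacked before it ever reaches the flannel.1 VTEP device on yurt-cloud.

To check this, capture packets on yurt-cloud while accessing the nginx Pod on edge-node-1: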

```
$ tcpdump -nne -i any tcp and host 10.233.68.7 and port 80
dropped privs to tcpdump
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on any, link-type LINUX_SLL (Linux cooked v1), capture size 262144 bytes
10:33:15.740815 In 78:2c:29:fe:2a:46 ethertype IPv4 (0x0800), length 76: 10.233.68.7.80 > 10.233.64.0.47322: Flags [S.], seq 3602181374, ack 1858373815, win 27960, options [mss 1410,sackOK,TS val 3744027371 ecr 2615033249,nop,wscale 7], length 0
10:33:15.742060 In 78:2c:29:fe:2a:46 ethertype IPv4 (0x0800), length 68: 10.233.68.7.80 > 10.233.64.0.47322: Flags [.], ack 76, win 219, options [nop,nop,TS val 3744027373 ecr 2615033251], length 0
10:33:15.743899 In 78:2c:29:fe:2a:46 ethertype IPv4 (0x0800), length 306: 10.233.68.7.80 > 10.233.64.0.47322: Flags [P.], seq 1:239, ack 76, win 219, options [nop,nop,TS val 3744027375 ecr 2615033251], length 238: HTTP: HTTP/1.1 200 OK
10:33:15.744384 In 78:2c:29:fe:2a:46 ethertype IPv4 (0x0800), length 680: 10.233.68.7.80 > 10.233.64.0.47322: Flags [P.], seq 239:851, ack 76, win 219, options [nop,nop,TS val 3744027375 ecr 2615033251], length 612: HTTP
10:33:15.745554 In 78:2c:29:fe:2a:46 ethertype IPv4 (0x0800), length 68: 10.233.68.7.80 > 10.233.64.0.47322: Flags [F.], seq 851, ack 77, win 219, options [nop,nop,TS val 3744027376 ecr 2615033255], length 0
```

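tcpdump captures only the packets coming back from nginx; none of the packets going to nginx show up.

At the same time, capture VXLAN packets on yurt-cloud (the destination IP 10.233.68.7 has to be converted to hexadecimal, 0x0ae94407):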

```
$ tcpdump -i eth0 -nv 'udp[46:4]=0x0ae94407'
```

This produces no relevant output, confirming that the cross-node packets really do not go through the VXLAN tunnel.

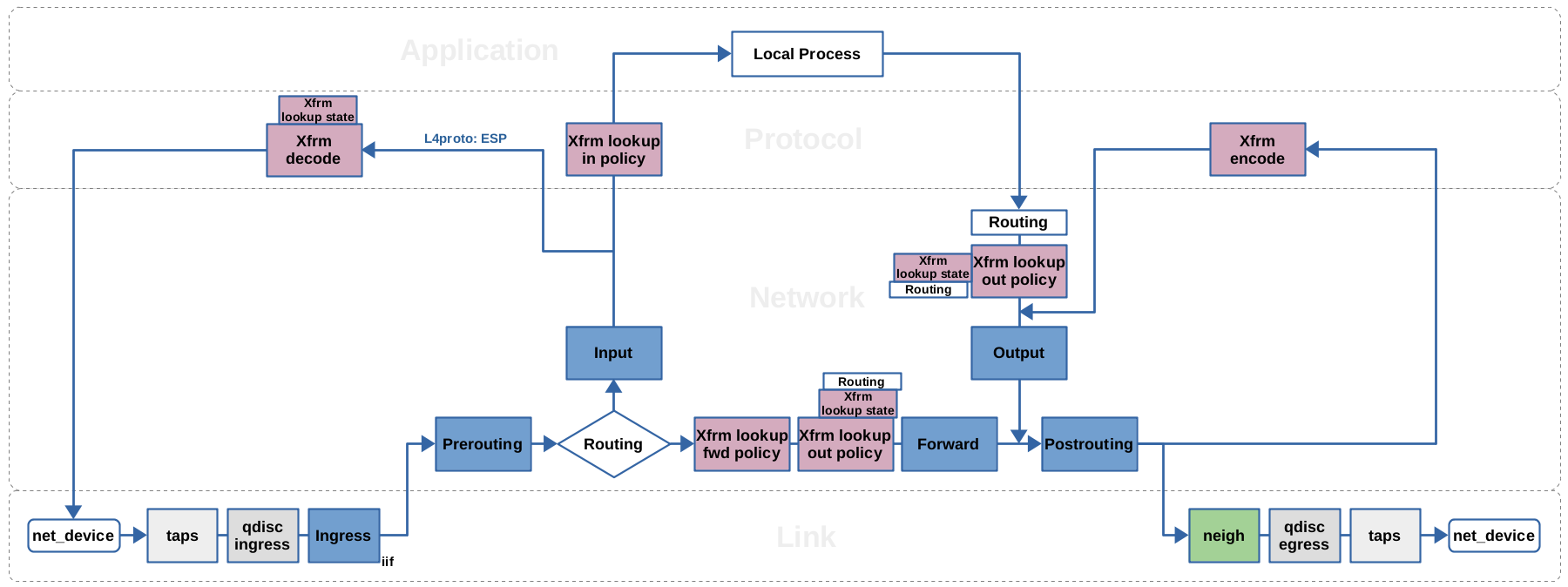

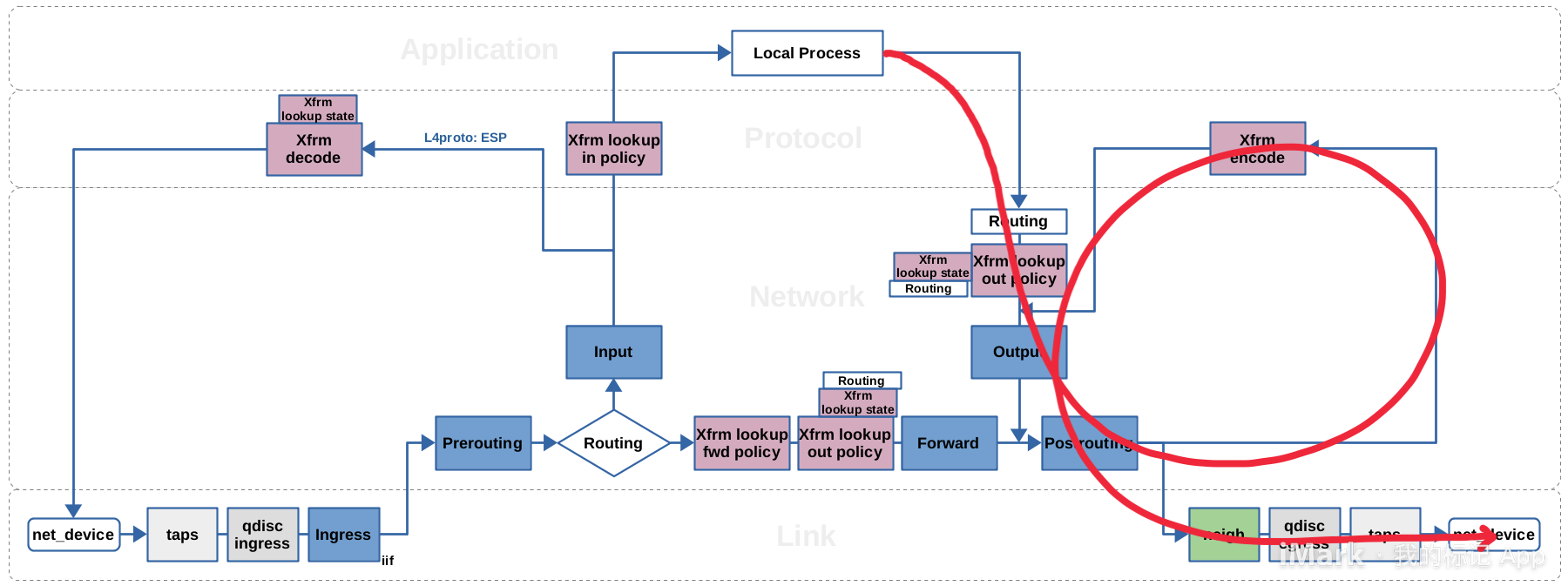

这张经典的 Netfilter 网络包流向图,虽然也覆盖了 XFRM 框架,其中列举了四种不同的 XFRM 操作:

- xfrm/socket lookup

- xfrm decode

- xfrm lookup

- xfrm encode

但 XFRM 实际上不太一样,得用另一张图:

根据上图来分析一下 TCP 数据包的流向:

-

在 curl 进程中 HTTP 属于应用层协议(七层),通过 socket 来到 Linux 内核中被封为 TCP 数据包(传输层)

-

TCP 数据包在内核中来到网络层,被封成 IP 数据包

-

路由表似乎在 XFRM 框架中不起作用,常规情况下数据包将命中

10.233.68.0/24 via 10.233.68.0 dev flannel.1 onlink这条路由前往 flannel.1 设备$ ip r default via 172.20.163.1 dev eth0 proto dhcp src 172.20.163.65 metric 100 10.233.64.0/24 dev cni0 proto kernel scope link src 10.233.64.1 10.233.65.0/24 via 10.233.65.0 dev flannel.1 onlink 10.233.68.0/24 via 10.233.68.0 dev flannel.1 onlink 169.254.169.254 via 172.20.163.2 dev eth0 proto dhcp src 172.20.163.65 metric 100 172.20.163.0/24 dev eth0 proto kernel scope link src 172.20.163.65 metric 100 -

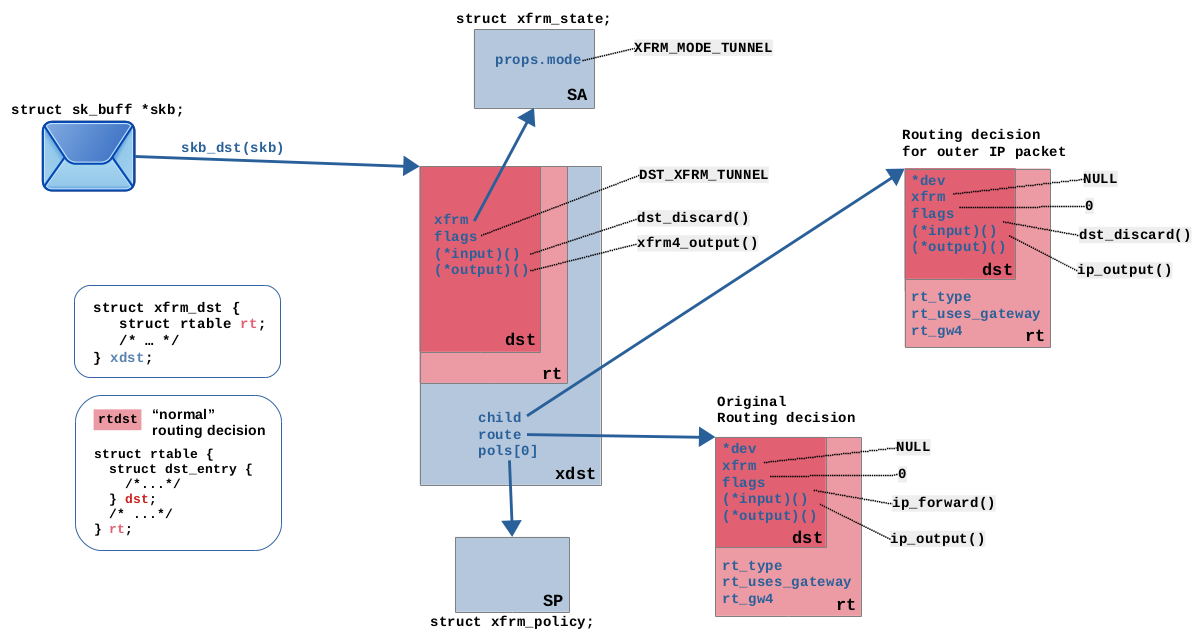

但在路由查找后,还会执行 xfrm lookup:XFRM 框架对 IPSec SPD(Security Policy Database)执行一次查找,搜寻匹配的输出策略

$ ip xfrm policy src 10.233.68.0/24 dst 10.233.64.0/24 dir fwd priority 1757392 ptype main tmpl src 172.20.150.183 dst 172.20.163.65 proto esp reqid 16393 mode tunnel src 10.233.68.0/24 dst 10.233.64.0/24 dir in priority 1757392 ptype main tmpl src 172.20.150.183 dst 172.20.163.65 proto esp reqid 16393 mode tunnel -

策略存在

$ ip xfrm state ip xfrm state src 172.20.150.183 dst 172.20.163.65 proto esp spi 0x50ed6730 reqid 16393 mode tunnel replay-window 0 flag af-unspec aead rfc4106(gcm(aes)) 0x60477cec727a57ceaa94b2c33122e2aea982d61fa012006a264a3d7f2a8551d8e2ddb44e 128 encap type espinudp sport 4500 dport 4500 addr 0.0.0.0 anti-replay esn context: seq-hi 0x0, seq 0xfd, oseq-hi 0x0, oseq 0x0 replay_window 128, bitmap-length 4 ffffffff ffffffff ffffffff ffffffff src 172.20.163.65 dst 172.20.150.183 proto esp spi 0x14026eea reqid 16393 mode tunnel replay-window 0 flag af-unspec aead rfc4106(gcm(aes)) 0x2319f92d34cfbf4b6976f129acc72d7b70856f6d83e5f007f66c1162cf49ea9295b995dc 128 encap type espinudp sport 4500 dport 4500 addr 0.0.0.0 anti-replay esn context: seq-hi 0x0, seq 0x0, oseq-hi 0x0, oseq 0x447 replay_window 128, bitmap-length 4 00000000 00000000 00000000 00000000此处 172.20.150.183 是 edge-node-1 节点的浮动 IP

SA 指定了隧道模式,XFRM 结构会被挂载到网络包(skb)上,包含了两条路由决策,指向 IPSec 的 SA 和 SP,将包导向 XFRM 加密和封装的路径,和普通的路由决策是不一样的。

-

xfrm encode:数据包流过 Netfilter Postrouting 钩子后,进入 VPN 隧道后被加密和封装。XFRM 框架根据挂载至数据包的函数指针来转换。对于 IPv4 包来说,这个入口函数是

xfrm4_output。封包完成后,挂载至数据包上的 XFRM 相关信息会被移除,只留下外层 IP 包的路由决策,目的 IP 172.20.150.183 将根据默认路由从 eth0 网卡出节点。$ ip r default via 172.20.163.1 dev eth0 proto dhcp src 172.20.163.65 metric 100 10.233.64.0/24 dev cni0 proto kernel scope link src 10.233.64.1 10.233.65.0/24 via 10.233.65.0 dev flannel.1 onlink 10.233.68.0/24 via 10.233.68.0 dev flannel.1 onlink 169.254.169.254 via 172.20.163.2 dev eth0 proto dhcp src 172.20.163.65 metric 100 172.20.163.0/24 dev eth0 proto kernel scope link src 172.20.163.65 metric 100

Raven Agent

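Next, let's look at how Raven Agent configures the VPN tunnel based on the Gateway object.

When the raven-agent daemon starts, it first initializes the route driver and the VPN driver according to its configuration file: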

```go
func Run(ctx context.Context, cfg *config.CompletedConfig) error {
	routeDriver, err := routedriver.New(cfg.RouteDriver, cfg.Config)
	if err != nil {
		return fmt.Errorf("fail to create route driver: %s, %s", cfg.RouteDriver, err)
	}
	err = routeDriver.Init()
	if err != nil {
		return fmt.Errorf("fail to initialize route driver: %s, %s", cfg.RouteDriver, err)
	}
	klog.Infof("route driver %s initialized", cfg.RouteDriver)

	vpnDriver, err := vpndriver.New(cfg.VPNDriver, cfg.Config)
	if err != nil {
		return fmt.Errorf("fail to create vpn driver: %s, %s", cfg.VPNDriver, err)
	}
	err = vpnDriver.Init()
	if err != nil {
		return fmt.Errorf("fail to initialize vpn driver: %s, %s", cfg.VPNDriver, err)
	}
	klog.Infof("VPN driver %s initialized", cfg.VPNDriver)

	// a lot of code here
}
```
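`routedriver.New` and `vpndriver.New` choose an implementation by the name found in the configuration. One common way to structure such a factory is a name-keyed registry; the Go sketch below shows that pattern with a hypothetical `Driver` interface and stub drivers. It illustrates the idea only and should not be read as Raven's actual code.

```go
package main

import (
	"fmt"
	"sort"
)

// Driver is a hypothetical, trimmed-down driver interface.
type Driver interface {
	Init() error
}

// stubDriver stands in for a real implementation such as libreswan.
type stubDriver struct{ name string }

func (s stubDriver) Init() error { return nil }

// registry maps a configured driver name to its constructor.
var registry = map[string]func() (Driver, error){}

func register(name string, ctor func() (Driver, error)) { registry[name] = ctor }

// newDriver looks the constructor up by name, like a New(name, cfg) factory.
func newDriver(name string) (Driver, error) {
	ctor, ok := registry[name]
	if !ok {
		known := make([]string, 0, len(registry))
		for k := range registry {
			known = append(known, k)
		}
		sort.Strings(known)
		return nil, fmt.Errorf("unknown driver %q, known drivers: %v", name, known)
	}
	return ctor()
}

func main() {
	register("libreswan", func() (Driver, error) { return stubDriver{"libreswan"}, nil })
	register("wireguard", func() (Driver, error) { return stubDriver{"wireguard"}, nil })

	d, err := newDriver("libreswan")
	if err != nil {
		fmt.Println(err)
		return
	}
	if err := d.Init(); err != nil {
		fmt.Println("init failed:", err)
		return
	}
	fmt.Println("VPN driver libreswan initialized")

	_, err = newDriver("openvpn")
	fmt.Println(err) // unknown driver "openvpn", known drivers: [libreswan wireguard]
}
```

Failing fast with the list of known names keeps a typo in the ConfigMap from surfacing later as a confusing runtime error.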

- There is one and only one route driver, VXLAN:

  ```go
  func (vx *vxlan) Init() (err error) {
  	vx.iptables, err = iptablesutil.New()
  	if err != nil {
  		return err
  	}
  	vx.ipset, err = ipsetutil.New(ravenMarkSet)
  	if err != nil {
  		return err
  	}
  	return
  }
  ```

- According to the configuration file, the VPN driver is libreswan; WireGuard is the other available option:

  ```
  $ kubectl get cm raven-agent-config -n kube-system -o yaml | grep vpn-driver
  vpn-driver: libreswan
  ```

  ```go
  func (l *libreswan) Init() error {
  	// Ensure secrets file
  	_, err := os.Stat(SecretFile)
  	if err == nil {
  		if err := os.Remove(SecretFile); err != nil {
  			return err
  		}
  	}
  	file, err := os.Create(SecretFile)
  	if err != nil {
  		klog.Errorf("fail to create secrets file: %v", err)
  		return err
  	}
  	defer file.Close()
  	psk := vpndriver.GetPSK()
  	fmt.Fprintf(file, "%%any %%any : PSK \"%s\"\n", psk)
  	return l.runPluto()
  }
  ```

raven-agent is itself a Kubernetes controller that watches Gateway events in the cluster:

```go
func NewEngineController(nodeName string, forwardNodeIP bool, routeDriver routedriver.Driver, manager manager.Manager,
	vpnDriver vpndriver.Driver) (*EngineController, error) {
	ctr := &EngineController{
		nodeName:      nodeName,
		forwardNodeIP: forwardNodeIP,
		queue:         workqueue.NewRateLimitingQueue(workqueue.DefaultControllerRateLimiter()),
		routeDriver:   routeDriver,
		manager:       manager,
		vpnDriver:     vpnDriver,
	}
	err := ctrl.NewControllerManagedBy(ctr.manager).
		For(&v1alpha1.Gateway{}, builder.WithPredicates(predicate.Funcs{
			CreateFunc: ctr.addGateway,
			UpdateFunc: ctr.updateGateway,
			DeleteFunc: ctr.deleteGateway,
		})).
		Complete(reconcile.Func(func(ctx context.Context, req reconcile.Request) (reconcile.Result, error) {
			return reconcile.Result{}, nil
		}))
	if err != nil {
		klog.ErrorS(err, "failed to new raven agent controller with manager")
	}
	ctr.ravenClient = ctr.manager.GetClient()
	return ctr, nil
}
```
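In the controller above, the reconcile function is a no-op, and the struct carries a rate-limited workqueue, which suggests that the predicate callbacks (`addGateway`, `updateGateway`, `deleteGateway`) enqueue work and a separate worker calls `sync()`. The stdlib-only Go sketch below models that pattern with a deduplicating queue. The single shared key, the handler, and the Gateway name `gw-edge` are assumptions for illustration, not taken from Raven.

```go
package main

import (
	"fmt"
	"sync"
)

// dedupQueue collapses repeated keys that arrive before a worker picks them
// up, similar in spirit to client-go's workqueue.
type dedupQueue struct {
	mu      sync.Mutex
	pending map[string]bool
	order   []string
}

func newDedupQueue() *dedupQueue { return &dedupQueue{pending: map[string]bool{}} }

// Add enqueues key unless it is already waiting.
func (q *dedupQueue) Add(key string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.pending[key] {
		return // already queued: coalesce
	}
	q.pending[key] = true
	q.order = append(q.order, key)
}

// Get pops the oldest key; ok is false when the queue is empty.
func (q *dedupQueue) Get() (key string, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.order) == 0 {
		return "", false
	}
	key, q.order = q.order[0], q.order[1:]
	delete(q.pending, key)
	return key, true
}

func main() {
	q := newDedupQueue()
	// Hypothetical predicate handler: every Gateway event enqueues the same
	// key, assuming sync() recomputes the whole network view anyway.
	onGatewayEvent := func(name string) bool {
		q.Add("sync")
		return false // nothing for the no-op reconciler to do
	}
	onGatewayEvent("gw-cloud")
	onGatewayEvent("gw-edge")

	syncs := 0
	for {
		if _, ok := q.Get(); !ok {
			break
		}
		syncs++ // a real worker would call sync() here
	}
	fmt.Println("sync calls:", syncs) // sync calls: 1
}
```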

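When a user creates or updates a Gateway object, the controller is triggered to reconcile and configures the VPN on the node according to the Gateway definition: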

```go
func (c *EngineController) sync() error {
	// a lot of code here

	klog.InfoS("applying network", "localEndpoint", nw.LocalEndpoint, "remoteEndpoint", nw.RemoteEndpoints)
	err = c.vpnDriver.Apply(nw, c.routeDriver.MTU)
	if err != nil {
		return err
	}
	err = c.routeDriver.Apply(nw, c.vpnDriver.MTU)
	if err != nil {
		return err
	}
	// ...
}
```
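Note that `sync()` hands each driver the other driver's MTU function, because the usable MTU depends on the overhead of both layers. As a rough illustration of the VPN side, the Go sketch below estimates the outer size of an ESP-in-UDP tunnel-mode packet for the SA shown earlier (`rfc4106(gcm(aes))` with a 128-bit ICV, UDP encapsulation on port 4500). Assumptions: an IPv4 outer header without options, an 8-byte GCM IV, 4-byte alignment of the ESP trailer, and no extra padding. The functions are hypothetical; this is not how Raven computes its MTU.

```go
package main

import "fmt"

const (
	outerIPv4 = 20 // outer IPv4 header, no options
	udpEncap  = 8  // UDP header for ESP-in-UDP (port 4500)
	espHeader = 8  // SPI + sequence number
	gcmIV     = 8  // explicit IV for rfc4106(gcm(aes))
	espTrail  = 2  // pad length + next header
	icvLen    = 16 // 128-bit integrity check value
	align     = 4  // ESP payload alignment
)

// espTunnelSize returns the outer packet size for an inner IP packet of n bytes.
func espTunnelSize(n int) int {
	body := n + espTrail
	pad := (align - body%align) % align
	return outerIPv4 + udpEncap + espHeader + gcmIV + body + pad + icvLen
}

// maxInner returns the largest inner packet that still fits in the outer MTU.
func maxInner(mtu int) int {
	n := mtu
	for n > 0 && espTunnelSize(n) > mtu {
		n--
	}
	return n
}

func main() {
	fmt.Println(espTunnelSize(84)) // 148: an 84-byte ping packet after encapsulation
	fmt.Println(maxInner(1500))    // 1438
}
```

Under these assumptions the tunnel costs 62 bytes for a full-size packet, which is why the inner MTU has to be lowered when traffic is tunneled.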

The VPN implementation is libreswan, and its `Apply` method is in https://github.com/openyurtio/raven/blob/45f2d1c87c2100d691cba05d216f55e7959b3f82/pkg/networkengine/vpndriver/libreswan/libreswan.go:

```go
func (l *libreswan) Apply(network *types.Network, routeDriverMTUFn func(*types.Network) (int, error)) (err error) {
	// a lot of code here

	for name, connection := range desiredConnections {
		err := l.connectToEndpoint(name, connection)
		errList = errList.Append(err)
	}
}

func (l *libreswan) connectToEndpoint(name string, connection *vpndriver.Connection) errorlist.List {
	errList := errorlist.List{}
	if _, ok := l.connections[name]; ok {
		klog.InfoS("skipping connect because connection already exists", "connectionName", name)
		return errList
	}
	err := l.whackConnectToEndpoint(name, connection)
	if err != nil {
		errList = errList.Append(err)
		klog.ErrorS(err, "error connect connection", "connectionName", name)
		return errList
	}
	l.connections[name] = connection
	return errList
}
```

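`whackConnectToEndpoint` assembles the command arguments and invokes the `/usr/libexec/ipsec/whack` binary shipped in the image to configure the libreswan VPN, which in turn sets up the IPsec tunnel.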
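Because `Apply` loops over `desiredConnections` and `connectToEndpoint` skips any name already in `l.connections`, the driver is effectively reconciling a desired set of connections against the current one, keyed by name. The Go sketch below isolates that set difference. `Connection`, `diff`, the connection names, and the second edge subnet and IP are hypothetical, and the excerpt above does not show how (or whether) obsolete connections are torn down, so `toDel` is only an assumption about what would need removing.

```go
package main

import (
	"fmt"
	"sort"
)

// Connection is a hypothetical stand-in for vpndriver.Connection.
type Connection struct {
	LocalSubnet, RemoteSubnet string
	RemoteIP                  string
}

// diff compares connections by name only, like the "connection already
// exists" check: names missing from current must be connected, and names
// missing from desired are candidates for removal.
func diff(current, desired map[string]Connection) (toAdd, toDel []string) {
	for name := range desired {
		if _, ok := current[name]; !ok {
			toAdd = append(toAdd, name)
		}
	}
	for name := range current {
		if _, ok := desired[name]; !ok {
			toDel = append(toDel, name)
		}
	}
	sort.Strings(toAdd)
	sort.Strings(toDel)
	return toAdd, toDel
}

func main() {
	current := map[string]Connection{
		"cloud-edge1": {"10.233.64.0/24", "10.233.68.0/24", "172.20.150.183"},
	}
	desired := map[string]Connection{
		"cloud-edge1": {"10.233.64.0/24", "10.233.68.0/24", "172.20.150.183"},
		"cloud-edge2": {"10.233.64.0/24", "10.233.65.0/24", "203.0.113.10"}, // hypothetical peer
	}
	toAdd, toDel := diff(current, desired)
	fmt.Println("connect:", toAdd, "delete:", toDel) // connect: [cloud-edge2] delete: []
}
```

Keying purely by name mirrors the excerpt, but it also means a changed endpoint under an unchanged name would be skipped; a real driver would need its own way to detect that.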

Finally, let's look at the route driver's `Apply` method in https://github.com/openyurtio/raven/blob/a529a600347b73df35beeedbe524a652a7735014/pkg/networkengine/routedriver/vxlan/vxlan.go:

```go
func (vx *vxlan) Apply(network *types.Network, vpnDriverMTUFn func() (int, error)) (err error) {
	if network.LocalEndpoint == nil || len(network.RemoteEndpoints) == 0 {
		klog.Info("no local gateway or remote gateway is found, cleaning up route setting")
		return vx.Cleanup()
	}
	if len(network.LocalNodeInfo) == 1 {
		klog.Infof("only gateway node exist in current gateway, cleaning up route setting")
		return vx.Cleanup()
	}

	// a lot of code here
}
```

The raven-agent log shows:

```
I1121 07:54:31.247236 1 vxlan.go:81] Tunnel: only gateway node exist in current gateway, cleaning up route setting
```

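So the route driver never goes on to configure any iptables rules or routes. yurt-cloud is the only node in its gateway, so there are no other nodes in that region whose cross-region traffic would have to be steered to the gateway node.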
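The two early-return conditions in `vxlan.Apply` can be isolated into a small decision function. The Go sketch below uses a hypothetical `Network` struct that keeps only what the checks need (a flag and counts in place of the real `LocalEndpoint`, `RemoteEndpoints`, and `LocalNodeInfo` fields) and reproduces the yurt-cloud case from the log. One remote gateway is assumed for yurt-cloud.

```go
package main

import "fmt"

// Network is a hypothetical reduction of types.Network to what the checks need.
type Network struct {
	HasLocalEndpoint bool
	RemoteEndpoints  int
	LocalNodes       int // nodes in the local gateway, including the gateway node
}

// routeAction reports whether the VXLAN route driver would clean up instead of
// configuring routes, following the two checks in vxlan.Apply.
func routeAction(nw Network) string {
	if !nw.HasLocalEndpoint || nw.RemoteEndpoints == 0 {
		return "cleanup: no local gateway or remote gateway"
	}
	if nw.LocalNodes == 1 {
		return "cleanup: only gateway node exists in current gateway"
	}
	return "configure routes and iptables"
}

func main() {
	// yurt-cloud: the only node in its gateway, with one remote gateway assumed.
	fmt.Println(routeAction(Network{HasLocalEndpoint: true, RemoteEndpoints: 1, LocalNodes: 1}))
	// cleanup: only gateway node exists in current gateway

	// A hypothetical region with a gateway node plus one more node.
	fmt.Println(routeAction(Network{HasLocalEndpoint: true, RemoteEndpoints: 1, LocalNodes: 2}))
	// configure routes and iptables
}
```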