OpenYurt IPVS & IPSec Troubleshooting

Jan 16, 2024 23:45

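The problem in one sentence: in an OpenYurt environment, the host network namespace can reach a service in another physical region through its Pod IP (over the IPSec tunnel), but not through its Service's Cluster IP.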

Take nginx as an example. Its Pod runs on edge-node-1, which sits in a different physical region from yurt-cloud. The Pod IP is 10.233.68.67 and the Service's Cluster IP is 10.255.31.243:

$ kubectl get po -o wide | grep nginx
nginx-deployment-69ff754794-46fth   1/1   Running   0   5h55m   10.233.68.67   edge-node-1   <none>   <none>

$ kubectl get svc | grep nginx
nginx-service   ClusterIP   10.255.31.243   <none>   80/TCP   5h53m

$ kubectl get nodes
NAME          STATUS   ROLES                         AGE   VERSION
edge-node-1   Ready    <none>                        27d   v1.22.3
edge-node-2   Ready    <none>                        21d   v1.22.3
yurt-cloud    Ready    control-plane,master,worker   28d   v1.22.3

On yurt-cloud, telnet to the Cluster IP 10.255.31.243 cannot establish a TCP connection, whereas telnet to the Pod IP 10.233.68.67 can.

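Hypothesis: whether nginx is reached through the Pod IP or the Cluster IP, after IPVS DNAT the packets and their path should be identical. So the packets are probably not being dropped on the cross-node path; the problem more likely lies with IPVS itself.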

Investigation

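Capturing packets on yurt-cloud and inside the nginx Pod's network namespace while running telnet 10.255.31.243 80 shows that:

- the TCP SYN travels through the tunnel to edge-node-1 and shows up on the nginx Pod's eth0 (a veth)
- nginx receives the SYN and answers with a SYN-ACK
- the SYN-ACK comes back to yurt-cloud through the IPSec tunnel and shows up, already decapsulated, on its eth0
- yet telnet never reaches the connected state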

In this Kubernetes cluster kube-proxy runs in IPVS mode, so IPVS DNATs the Cluster IP to the Pod IP:

$ ipvsadm -L -n -t 10.255.31.243:80
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.255.31.243:80 rr
  -> 10.233.68.67:80              Masq    1      0          0
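To make the rr scheduler and Masq forwarding in the entry above concrete, here is a toy model in C. The structs (virtual_service, real_server, pkt) and helper names are hypothetical simplifications, not IPVS's kernel data structures; the sketch only shows the destination rewrite from Cluster IP to Pod IP, which the reply path later has to undo.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, heavily simplified model of one IPVS virtual service.
 * These are NOT the kernel's ip_vs_service / ip_vs_dest structures. */
struct real_server {
    uint32_t ip;                    /* Pod IP, host byte order */
    uint16_t port;
};

struct virtual_service {
    uint32_t vip;                   /* Cluster IP */
    uint16_t vport;
    const struct real_server *rs;   /* backends */
    size_t n_rs;
    size_t next;                    /* round-robin cursor */
};

struct pkt {
    uint32_t saddr, daddr;
    uint16_t sport, dport;
};

/* Build an IPv4 address in host byte order. */
static uint32_t ip4(unsigned a, unsigned b, unsigned c, unsigned d)
{
    return (uint32_t)a << 24 | (uint32_t)b << 16 | (uint32_t)c << 8 | (uint32_t)d;
}

/* "rr": hand out the backends in turn. */
static const struct real_server *rr_schedule(struct virtual_service *vs)
{
    assert(vs->n_rs > 0);
    const struct real_server *r = &vs->rs[vs->next];
    vs->next = (vs->next + 1) % vs->n_rs;
    return r;
}

/* "Masq": DNAT VIP:vport to the chosen backend. The source address is left
 * untouched here; any SNAT is done separately by netfilter.
 * Returns 1 if the packet was addressed to this service, 0 otherwise. */
static int ipvs_masq_dnat(struct virtual_service *vs, struct pkt *p)
{
    if (p->daddr != vs->vip || p->dport != vs->vport)
        return 0;
    const struct real_server *r = rr_schedule(vs);
    p->daddr = r->ip;
    p->dport = r->port;
    return 1;
}
```

With the single backend listed by ipvsadm, every new connection to 10.255.31.243:80 is rewritten to 10.233.68.67:80.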

While telnet is running, watch the conntrack events and the state of telnet's own connection:

$ conntrack -E -o ktimestamp | grep 10.255.31.243
[NEW] tcp 6 120 SYN_SENT src=10.255.31.243 dst=10.255.31.243 sport=57232 dport=80 [UNREPLIED] src=10.233.68.67 dst=10.233.64.0 sport=80 dport=16167
[UPDATE] tcp 6 60 SYN_RECV src=10.255.31.243 dst=10.255.31.243 sport=57232 dport=80 src=10.233.68.67 dst=10.233.64.0 sport=80 dport=16167
[UPDATE] tcp 6 60 SYN_RECV src=10.255.31.243 dst=10.255.31.243 sport=57232 dport=80 src=10.233.68.67 dst=10.233.64.0 sport=80 dport=16167
[UPDATE] tcp 6 60 SYN_RECV src=10.255.31.243 dst=10.255.31.243 sport=57232 dport=80 src=10.233.68.67 dst=10.233.64.0 sport=80 dport=16167
[UPDATE] tcp 6 60 SYN_RECV src=10.255.31.243 dst=10.255.31.243 sport=57232 dport=80 src=10.233.68.67 dst=10.233.64.0 sport=80 dport=16167
[UPDATE] tcp 6 60 SYN_RECV src=10.255.31.243 dst=10.255.31.243 sport=57232 dport=80 src=10.233.68.67 dst=10.233.64.0 sport=80 dport=16167

$ netstat -ant | grep 10.255.31.243
tcp 0 1 10.255.31.243:57232 10.255.31.243:80 SYN_SENT

- NAT changes the source port (57232 -> 16167); a capture inside the nginx Pod's network namespace shows the same
- conntrack moves from SYN_SENT to SYN_RECV
- telnet's TCP connection stays in SYN_SENT

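conntrack tracks a TCP connection through SYN_SENT -> SYN_RECV -> ESTABLISHED as the three-way handshake progresses. SYN_SENT means the initial SYN has been seen in one direction but no SYN-ACK has come back yet; SYN_RECV means the SYN-ACK has been seen; ESTABLISHED means the handshake has completed and the connection is up.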
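A minimal C model of the happy-path transitions just described, assuming only three segment types and ignoring the sequence and window checks the real tracker in nf_conntrack_proto_tcp.c performs. It illustrates why retransmitted SYN-ACKs keep conntrack in SYN_RECV: the move to ESTABLISHED needs the client's final ACK, which the client's TCP stack only sends once the SYN-ACK actually reaches its socket. The enum and function names are hypothetical.

```c
#include <assert.h>

/* Hypothetical, happy-path-only model of conntrack's TCP handshake tracking. */
enum ct_tcp_state { CT_NONE, CT_SYN_SENT, CT_SYN_RECV, CT_ESTABLISHED };

enum seg { SEG_SYN, SEG_SYNACK, SEG_ACK };

/* from_orig is 1 for the original direction (client -> server)
 * and 0 for the reply direction (server -> client). */
static enum ct_tcp_state ct_advance(enum ct_tcp_state s, enum seg g, int from_orig)
{
    switch (s) {
    case CT_NONE:
        return (g == SEG_SYN && from_orig) ? CT_SYN_SENT : s;
    case CT_SYN_SENT:
        return (g == SEG_SYNACK && !from_orig) ? CT_SYN_RECV : s;
    case CT_SYN_RECV:
        /* A repeated SYN-ACK leaves the state unchanged. */
        return (g == SEG_ACK && from_orig) ? CT_ESTABLISHED : s;
    default:
        return s;
    }
}
```

In the failing case the sequence stops after the SYN-ACK, so conntrack sits in SYN_RECV while the socket, which never saw the SYN-ACK, stays in SYN_SENT.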

From this we can infer that the SYN-ACK sent by nginx does reach conntrack on yurt-cloud, but never reaches telnet.

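Cross-region traffic in OpenYurt goes through an IPSec tunnel, which the Linux kernel implements with the XFRM framework. We also noticed that the XfrmInNoPols counter in /proc/net/xfrm_stat grows with every telnet attempt. (Note that awk "NR=12" in the command below assigns rather than compares, unlike NR==12, so it matches every line and the whole file is printed.)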

$ watch 'awk "NR=12" /proc/net/xfrm_stat'
Every 2.0s: awk "NR=12" /proc/net/xfrm_stat    yurt-cloud: Mon Oct 16 16:43:27 2023

XfrmInError 0
XfrmInBufferError 0
XfrmInHdrError 0
XfrmInNoStates 0
XfrmInStateProtoError 0
XfrmInStateModeError 0
XfrmInStateSeqError 0
XfrmInStateExpired 0
XfrmInStateMismatch 0
XfrmInStateInvalid 0
XfrmInTmplMismatch 0
XfrmInNoPols 171
XfrmInPolBlock 0
XfrmInPolError 0
XfrmOutError 0
XfrmOutBundleGenError 0
XfrmOutBundleCheckError 0
XfrmOutNoStates 0
XfrmOutStateProtoError 0
XfrmOutStateModeError 0
XfrmOutStateSeqError 0
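Instead of eyeballing the whole table, the counter can also be read programmatically. The C sketch below looks up one named counter in the text of /proc/net/xfrm_stat (present on kernels built with CONFIG_XFRM_STATISTICS), whose lines are a counter name followed by whitespace and a value. The function names are hypothetical; sampling the counter before and after a batch of telnet attempts shows whether those attempts are what drives XfrmInNoPols up.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Look up one counter (e.g. "XfrmInNoPols") in the text of /proc/net/xfrm_stat.
 * Returns 0 and stores the value in *out on success, -1 if not found. */
static int xfrm_stat_get(const char *text, const char *name, unsigned long long *out)
{
    size_t n = strlen(name);
    const char *line = text;

    assert(name != NULL && out != NULL);
    while (line && *line) {
        /* Require whitespace after the name so a prefix such as "XfrmInNoPol"
         * does not match the longer "XfrmInNoPols". */
        if (strncmp(line, name, n) == 0 && (line[n] == ' ' || line[n] == '\t'))
            return sscanf(line + n, "%llu", out) == 1 ? 0 : -1;
        line = strchr(line, '\n');
        if (line)
            line++;                 /* move to the start of the next line */
    }
    return -1;
}

/* Read the file at `path` and return XfrmInNoPols, or -1 on any error. */
static long long read_xfrm_in_no_pols(const char *path)
{
    char buf[8192];
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;
    size_t len = fread(buf, 1, sizeof(buf) - 1, f);
    fclose(f);
    buf[len] = '\0';

    unsigned long long v;
    return xfrm_stat_get(buf, "XfrmInNoPols", &v) == 0 ? (long long)v : -1;
}
```

On yurt-cloud this would be called as read_xfrm_in_no_pols("/proc/net/xfrm_stat").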

To find where the packets are dropped, we ran telnet concurrently from a script and watched with dropwatch:

$ dropwatch -l kas
Initalizing kallsyms db
dropwatch> start
Enabling monitoring...
Kernel monitoring activated.
Issue Ctrl-C to stop monitoring
16 drops at ip_rcv+11b (0xffffffffb04cb47b)
5 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
11 drops at skb_queue_purge+18 (0xffffffffb0412258)
2 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
34 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
2 drops at skb_queue_purge+18 (0xffffffffb0412258)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
2 drops at skb_queue_purge+18 (0xffffffffb0412258)
2 drops at ip_rcv+11b (0xffffffffb04cb47b)
2 drops at unix_stream_connect+2f0 (0xffffffffb0557ca0)
4 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
7 drops at skb_queue_purge+18 (0xffffffffb0412258)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
3 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
3 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
7 drops at skb_queue_purge+18 (0xffffffffb0412258)
2 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
13 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
3 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at ip6_mc_input+1e6 (0xffffffffb05608f6)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
3 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
182 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
2 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
2 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
14 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
2 drops at ip_rcv+11b (0xffffffffb04cb47b)
13 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
5 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
5 drops at skb_queue_purge+18 (0xffffffffb0412258)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
1 drops at skb_queue_purge+18 (0xffffffffb0412258)
19 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
93 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
5 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
7 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
5 drops at ip_rcv+11b (0xffffffffb04cb47b)
21 drops at ip_rcv+11b (0xffffffffb04cb47b)
209 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
5 drops at ip_rcv+11b (0xffffffffb04cb47b)
2 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at ip_rcv+11b (0xffffffffb04cb47b)
53 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
2 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
15 drops at ip_rcv+11b (0xffffffffb04cb47b)
3 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
33 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
203 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
2 drops at skb_queue_purge+18 (0xffffffffb0412258)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
1 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
2 drops at skb_queue_purge+18 (0xffffffffb0412258)
104 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
3 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
6 drops at skb_queue_purge+18 (0xffffffffb0412258)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
13 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
4 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
3 drops at skb_queue_purge+18 (0xffffffffb0412258)
1 drops at ip6_mc_input+1e6 (0xffffffffb05608f6)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
5 drops at ip_rcv+11b (0xffffffffb04cb47b)
7 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
16 drops at skb_queue_purge+18 (0xffffffffb0412258)
8 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
1 drops at ip6_mc_input+1e6 (0xffffffffb05608f6)
2 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
1 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
1 drops at tcp_validate_incoming+fc (0xffffffffb04e77fc)
11 drops at ip_rcv+11b (0xffffffffb04cb47b)
1 drops at sk_stream_kill_queues+50 (0xffffffffb041a500)
2 drops at ip_rcv_finish+212 (0xffffffffb04cad02)
1 drops at tcp_v4_do_rcv+70 (0xffffffffb04f6510)
60 drops at tcp_v4_rcv+81 (0xffffffffb04f7f41)
2 drops at __init_scratch_end+d4f6a99 (0xffffffffc0af6a99)
2 drops at skb_queue_purge+18 (0xffffffffb0412258)

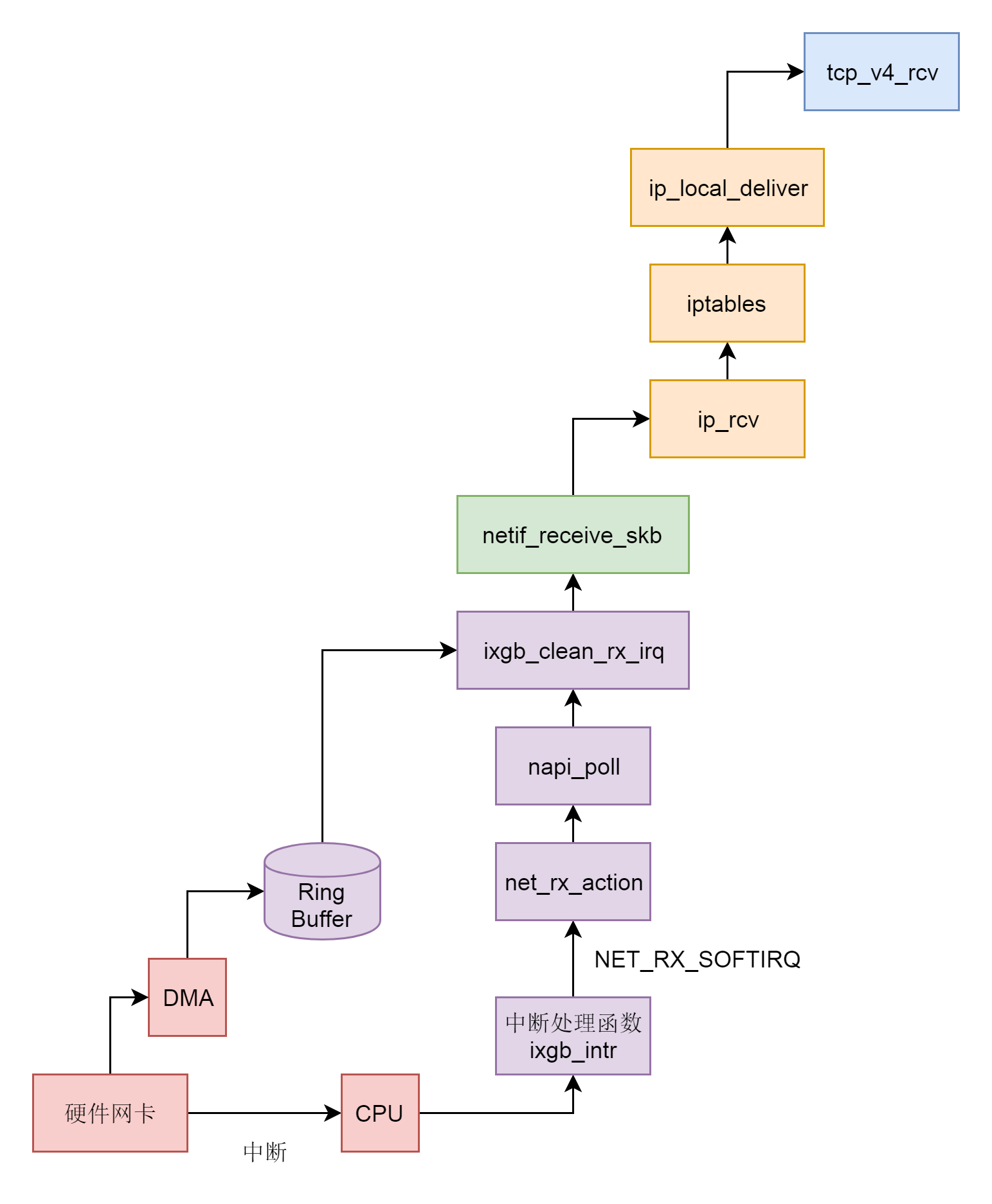
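The per-interval dropwatch lines above are easier to compare once summed per symbol. This C sketch (hypothetical helper names) parses lines of the form "<count> drops at <symbol>+<offset> (<addr>)" and totals them by symbol; lines that do not match, such as dropwatch's banner, are skipped.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

struct drop_site {
    char sym[64];
    unsigned long total;
};

/* Sum dropwatch lines per symbol into `out` (capacity `cap`).
 * Returns the number of distinct symbols stored. */
static size_t tally_drops(const char *const *lines, size_t n_lines,
                          struct drop_site *out, size_t cap)
{
    size_t n_out = 0;

    assert(cap == 0 || out != NULL);
    for (size_t i = 0; i < n_lines; i++) {
        unsigned long cnt;
        char sym[64];

        /* %63[^+] reads the symbol up to the '+' that precedes the offset. */
        if (sscanf(lines[i], "%lu drops at %63[^+]", &cnt, sym) != 2)
            continue;

        size_t j = 0;
        while (j < n_out && strcmp(out[j].sym, sym) != 0)
            j++;
        if (j == n_out) {
            if (n_out == cap)
                continue;           /* table full: ignore further symbols */
            strcpy(out[j].sym, sym);
            out[j].total = 0;
            n_out++;
        }
        out[j].total += cnt;
    }
    return n_out;
}

/* Index of the symbol with the most drops, or -1 if there are none. */
static long top_drop_site(const struct drop_site *sites, size_t n)
{
    long best = -1;
    for (size_t i = 0; i < n; i++)
        if (best < 0 || sites[i].total > sites[best].total)
            best = (long)i;
    return best;
}
```

Fed the full output, tcp_v4_rcv stands out by a wide margin over ip_rcv and the other sites.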

By far the most drops occur in tcp_v4_rcv; dropwatch reports no drops inside iptables, i.e. netfilter.

The relevant part of tcp_v4_rcv:

int tcp_v4_rcv(struct sk_buff *skb)
{
    // a lot of code here

    if (!xfrm4_policy_check(sk, XFRM_POLICY_IN, skb))
        goto discard_and_relse;
}

xfrm4_policy_check ends up in __xfrm_policy_check, whose relevant part is:

int __xfrm_policy_check(struct sock *sk, int dir, struct sk_buff *skb,
                        unsigned short family)
{
    // a lot of code here

    if (!pol) {
        if (skb->sp && secpath_has_nontransport(skb->sp, 0, &xerr_idx)) {
            xfrm_secpath_reject(xerr_idx, skb, &fl);
            XFRM_INC_STATS(net, LINUX_MIB_XFRMINNOPOLS);
            return 0;
        }
        return 1;
    }
}

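- xfrm4_policy_check looks up the XFRM policies and finds none; since the packet arrived through a tunnel-mode (non-transport) IPSec SA, it increments the counter in /proc/net/xfrm_stat, which is why XfrmInNoPols keeps growing
- tcp_v4_rcv then jumps to discard_and_relse and the packet is dropped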
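The no-policy branch can be modelled in a few lines of C. The types below are hypothetical stand-ins for the kernel's secpath and xfrm state modes; the sketch shows that a packet which arrived through a tunnel-mode ESP SA is dropped and counted when no inbound policy matches, whereas plain traffic without a secpath is accepted.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-ins for kernel xfrm types; illustrative only. */
enum xfrm_mode { XFRM_MODE_TRANSPORT, XFRM_MODE_TUNNEL };

/* Modes of the SAs that were applied to a packet on input (its secpath). */
struct secpath {
    const enum xfrm_mode *modes;
    size_t len;
};

struct xfrm_counters {
    unsigned long in_no_pols;       /* XfrmInNoPols */
};

/* Did any applied SA use a non-transport mode (e.g. tunnel)? */
static int has_nontransport(const struct secpath *sp)
{
    for (size_t i = 0; i < sp->len; i++)
        if (sp->modes[i] != XFRM_MODE_TRANSPORT)
            return 1;
    return 0;
}

/* Models only the `if (!pol)` branch of __xfrm_policy_check(), i.e. the case
 * where no inbound policy matched the decoded flow. Returns 1 to accept, 0 to drop. */
static int policy_check_no_pol(const struct secpath *sp, struct xfrm_counters *c)
{
    assert(c != NULL);
    if (sp && has_nontransport(sp)) {
        c->in_no_pols++;            /* XFRM_INC_STATS(net, LINUX_MIB_XFRMINNOPOLS) */
        return 0;                   /* caller (tcp_v4_rcv) goes to discard_and_relse */
    }
    return 1;                       /* non-IPsec traffic passes */
}
```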

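By the time the packet reaches xfrm_policy_check, its addresses have already been translated back by IPVS (DNAT on the way out means the reverse SNAT on the way back): the TCP packet's source IP has been changed back to the Cluster IP, the Service's VIP.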

$ ip xfrm policy
# ...
src 10.233.68.0/24 dst 10.233.64.0/24
    dir fwd priority 1757392 ptype main
    tmpl src 172.20.150.183 dst 172.20.163.65
        proto esp reqid 16421 mode tunnel
# ...

We manually added an XFRM policy on yurt-cloud with src set to the Cluster IP CIDR 10.255.0.0/18 and dst set to the Pod IP CIDR 10.233.0.0/16, so that both the source and the destination IP of the reply fall within it:

$ ip xfrm policy add src 10.255.0.0/18 dst 10.233.0.0/16 dir in ptype main action allow priority 1757392 tmpl src 172.20.150.183 dst 172.20.163.65 proto esp mode tunnel reqid 16421

$ ip xfrm policy
src 10.255.0.0/18 dst 10.233.0.0/16
    dir in priority 1757392 ptype main
    tmpl src 172.20.150.183 dst 172.20.163.65
        proto esp reqid 16421 mode tunnel
# ...
src 10.233.68.0/24 dst 10.233.64.0/24
    dir fwd priority 1757392 ptype main
    tmpl src 172.20.150.183 dst 172.20.163.65
        proto esp reqid 16421 mode tunnel
# ...

With this policy in place, the host network can reach the service in the Pod across physical regions through the Service's Cluster IP.
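A rough C model of the selector match behind xfrm_policy_lookup, reduced to source and destination prefixes (real selectors also cover ports, protocol and interface). It assumes Raven installs an inbound (dir in) policy with the same 10.233.68.0/24 -> 10.233.64.0/24 selector as the fwd policy listed above, since the rest of the listing is elided. The flow's destination 10.233.64.0 is the SNAT address from the conntrack reply tuple; its source is either the Pod IP or, in IPVS mode, the Cluster IP. All names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Build an IPv4 address in host byte order. */
static uint32_t ip4(unsigned a, unsigned b, unsigned c, unsigned d)
{
    return (uint32_t)a << 24 | (uint32_t)b << 16 | (uint32_t)c << 8 | (uint32_t)d;
}

/* Is addr inside net/plen? */
static int in_prefix(uint32_t addr, uint32_t net, unsigned plen)
{
    assert(plen <= 32);
    uint32_t mask = plen == 0 ? 0 : UINT32_MAX << (32 - plen);
    return (addr & mask) == (net & mask);
}

/* Simplified XFRM selector: source and destination prefixes only. */
struct selector {
    uint32_t src;
    unsigned src_plen;
    uint32_t dst;
    unsigned dst_plen;
};

static int selector_match(const struct selector *sel, uint32_t saddr, uint32_t daddr)
{
    return in_prefix(saddr, sel->src, sel->src_plen) &&
           in_prefix(daddr, sel->dst, sel->dst_plen);
}
```

A flow with source 10.255.31.243 misses the 10.233.68.0/24 selector but falls inside 10.255.0.0/18 -> 10.233.0.0/16, which is why the manually added policy lets the reply through.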

Going further

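Even without the policy above, Pods running on yurt-cloud can reach the service across physical regions through the Service's Cluster IP, because once the reply enters the Pod's network namespace it is no longer subject to an XFRM policy check.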

kube-proxy in iptables mode does not have this problem either.

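In IPVS mode, the flow that xfrm_policy_check -> nf_nat_decode_session reconstructs differs from the one in iptables mode:

- in iptables mode it recovers both the original source IP and destination IP, so xfrm_policy_lookup finds the policy set up by OpenYurt Raven
- in IPVS mode only the destination IP is correct and the source IP is wrong, so the subsequent xfrm_policy_lookup finds no policy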

For IPv4, nf_nat_decode_session is implemented by nf_nat_ipv4_decode_session, which fills in the flow according to the conntrack status; the status bits record whether the connection has been SNATed and/or DNATed:

enum ip_conntrack_status {
    /* Connection needs src nat in orig dir. This bit never changed. */
    IPS_SRC_NAT_BIT = 4,
    IPS_SRC_NAT = (1 << IPS_SRC_NAT_BIT),

    /* Connection needs dst nat in orig dir. This bit never changed. */
    IPS_DST_NAT_BIT = 5,
    IPS_DST_NAT = (1 << IPS_DST_NAT_BIT),

    /* Both together. */
    IPS_NAT_MASK = (IPS_DST_NAT | IPS_SRC_NAT),
};

static void nf_nat_ipv4_decode_session(struct sk_buff *skb,
                                       const struct nf_conn *ct,
                                       enum ip_conntrack_dir dir,
                                       unsigned long statusbit,
                                       struct flowi *fl)
{
    // a lot of code here

    statusbit ^= IPS_NAT_MASK;

    if (ct->status & statusbit) {
        fl4->saddr = t->src.u3.ip;
        if (t->dst.protonum == IPPROTO_TCP ||
            t->dst.protonum == IPPROTO_UDP ||
            t->dst.protonum == IPPROTO_UDPLITE ||
            t->dst.protonum == IPPROTO_DCCP ||
            t->dst.protonum == IPPROTO_SCTP)
            fl4->fl4_sport = t->src.u.all;
    }
}

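- in iptables mode, the conntrack status of the received skb is 0x1ba, which includes both SNAT (0x10) and DNAT (0x20)
- in IPVS mode, the conntrack status of the received skb is 0x19a, which includes only SNAT (0x10) and not DNAT (0x20); IPVS rewrites the destination itself rather than through netfilter NAT, so the DNAT bit is never set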
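To see why only the source address comes out wrong, here is a user-space C model of the reply-direction path through nf_nat_ipv4_decode_session. It assumes, as in the kernel's __nf_nat_decode_session, that a reply-direction packet starts with statusbit = IPS_SRC_NAT and that t is the reply tuple; ports are omitted and the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Bit values from enum ip_conntrack_status. */
#define IPS_SRC_NAT  0x10UL
#define IPS_DST_NAT  0x20UL
#define IPS_NAT_MASK (IPS_DST_NAT | IPS_SRC_NAT)

struct tuple { uint32_t src, dst; };      /* conntrack reply tuple (wire addresses) */
struct flow4 { uint32_t saddr, daddr; };  /* flow used for the XFRM policy lookup */

static uint32_t ip4(unsigned a, unsigned b, unsigned c, unsigned d)
{
    return (uint32_t)a << 24 | (uint32_t)b << 16 | (uint32_t)c << 8 | (uint32_t)d;
}

/* `fl` starts with the header addresses as seen after NAT was undone. Each
 * address is restored to its on-the-wire value only if conntrack recorded
 * the corresponding kind of NAT. */
static void decode_reply(unsigned long status, const struct tuple *reply, struct flow4 *fl)
{
    unsigned long statusbit = IPS_SRC_NAT;    /* reply direction */

    if (status & statusbit)
        fl->daddr = reply->dst;

    statusbit ^= IPS_NAT_MASK;                /* now IPS_DST_NAT */
    if (status & statusbit)
        fl->saddr = reply->src;
}
```

Fed the two status values above and the reply tuple from the conntrack output (src=10.233.68.67, dst=10.233.64.0), the model restores both addresses for 0x1ba but leaves the source at the Cluster IP for 0x19a, which is the flow that then misses Raven's policies.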

Conclusion

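This is a bug in OpenYurt Raven. One option is for Raven to configure an additional XFRM policy when kube-proxy runs in IPVS mode. Kudos to the folks at Alibaba who helped with the investigation; I will follow up with the fix.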