计量、追踪和日志

Feb 2, 2022 22:00 · 1941 words · 4 minute read

在 2017 年的分布式追踪峰会(2017 Distributed Tracing Summit)结束后,Peter Bourgon 撰写了总结文章《Metrics, Tracing, and Logging》,系统地阐述了这三者的定义、特征,以及它们之间的关系与差异,受到了业界的广泛认可。

译文

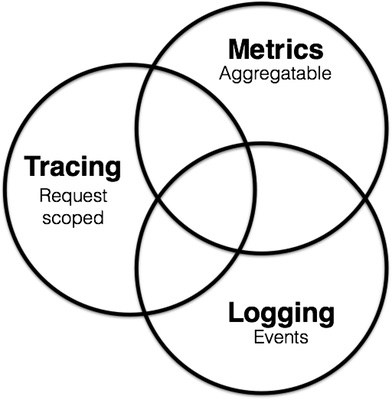

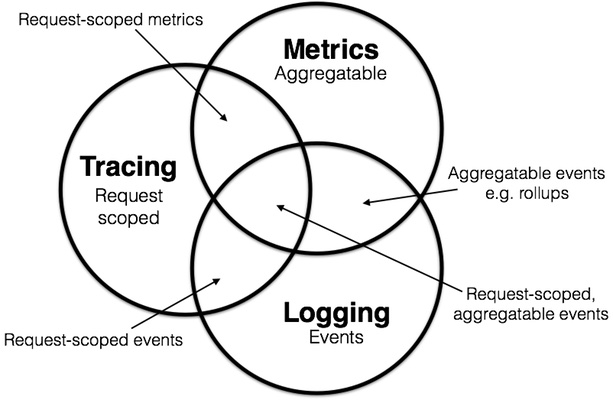

简而言之,我觉得咱们在共同的词汇上有些歧义。我想着也许可以把监控领域或者说是可观察性以韦恩图的形式展现出来。计量、追踪和日志都是更宽泛的画面的一部分,而且在某些情况下肯定会重叠,但我想找出每一项真正的特性。

计量(metrics) 的决定性特征在于它们是可聚合(aggregatable)的:它们都是在一段时间内组成轨距(gauge)、计数器(counter)或直方图(histogram)的最小(原子)单位。举几个栗子:一个队列的当前深度可被建模为一段轨距,以最后写入的语义聚合更新;过来的 HTTP 请求数量可被建模为一个计数器,以简单的加法聚合更新;一条请求的持续时间可被建模为一个直方图,以时间组聚合更新并产生统计。

日志(logging) 的决定性特征是它处理离散的事件。举几个栗子:向 Elasticsearch 发送应用程序的调试或错误信息;通过 Kafka 向 BigTable 等数据湖推送审计/跟踪事件;或从服务调用中提取请求特定的元数据并发送给 NewRelic 等错误追踪服务。

追踪(tracing) 的唯一决定性特征是,它处理的是请求范围(request-scoped)的信息。任何数据或元数据都可以与系统中单个事务性对象的生命周期绑定。举几个栗子:RPC 远程服务的持续时间;发送到数据库的真实 SQL 查询语句文本;或者一个 HTTP 请求的相关 ID。

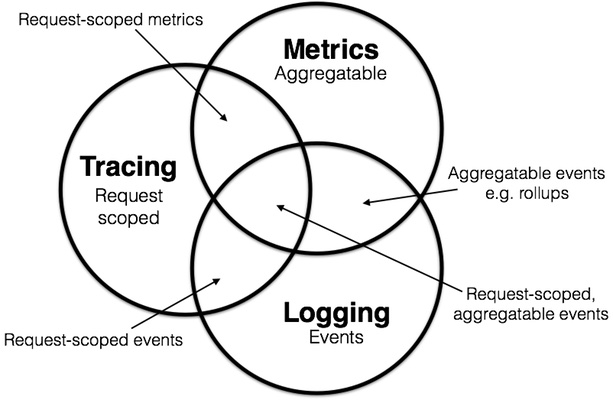

有了这些定义,我们就可以给重叠的部分贴上标签了。

当然很多典型云原生应用程序的组件最终都是请求范围(request-scoped)的,因此在更广泛的追踪上下文下谈论这些可能有意义。但我们现在能够观察到,并非所有的组件都与请求的生命周期相关:例如逻辑组件的诊断信息,或处理的生命周期细节,都是与任何离散的请求正交(orthogonal)的。因此不是所有的计量或日志都可以塞进追踪系统中——至少啥都不做。或者我们可能意识到在应用程序中直接使用计量会带来巨大的收益;相反地,将计量塞进日志管道弊大于利。

从此我们可以开始对现有的系统进行分类。例如 Prometheus 从一个计量系统起家,随着时间的推移它可能会向追踪的方向发展,从而涉足请求返回的计量,但不太可能会深入到日志的集合。ELK 提供了日志和时间轴,将其置于可聚合的事件集合,但似乎它也在其他领域不断累积了更多的功能,将它推向中心。

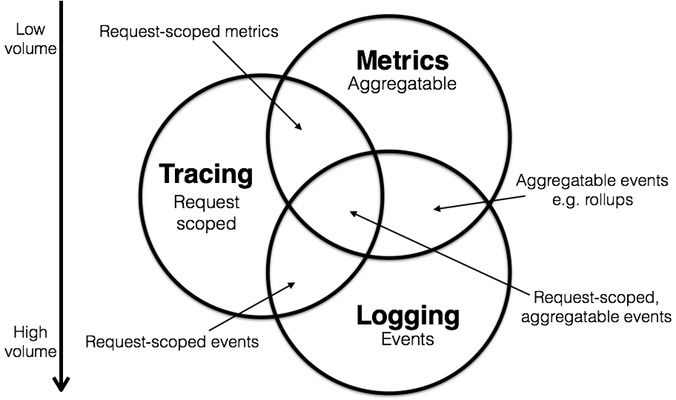

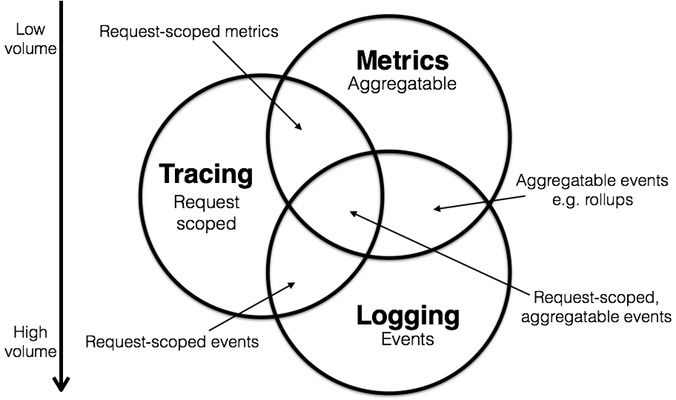

此外,我注意到一个奇怪的细节,作为这种可视化的副作用。在这三个领域中,计量往往需要最少的资源来管理,因为就其性质而言它们“压缩”得很好。相反日志往往体量巨大,经常超过产生它的生产流量。所以我们可以画一个开销的梯度图,从计量(低)到日志(高)——追踪可能位于中间某处。

原文

Today I had the good fortune of attending the 2017 Distributed Tracing Summit, with lots of rad folks from orgs like AWS/X-Ray, OpenZipkin, OpenTracing, Instana, Datadog, Librato, and many others I regret that I’m forgetting. At one point the discussion took a turn toward project scope and definitions. Should a tracing system also manage logging? What indeed is logging, when viewed through the different lenses represented in the room? And where do all of the various concrete systems fit in to the picture?

In short, I felt that we were stumbling a little bit around a shared vocabulary. I thought we could probably map out the domain of instrumentation, or observability, as a sort of Venn diagram. Metrics, tracing, and logging are definitely all parts of a broader picture, and can definitely overlap in some circumstances, but I wanted to try and identify the properties of each that were truly distinct. I had a think over a coffee break and came up with this.

I think that the defining characteristic of metrics is that they are aggregatable: they are the atoms that compose into a single logical gauge, counter, or histogram over a span of time. As examples: the current depth of a queue could be modeled as a gauge, whose updates aggregate with last-writer-win semantics; the number of incoming HTTP requests could be modeled as a counter, whose updates aggregate by simple addition; and the observed duration of a request could be modeled into a histogram, whose updates aggregate into time-buckets and yield statistical summaries.

I think that the defining characteristic of logging is that it deals with discrete events. As examples: application debug or error messages emitted via a rotated file descriptor through syslog to Elasticsearch (or OK Log, nudge nudge); audit-trail events pushed through Kafka to a data lake like BigTable; or request-specific metadata pulled from a service call and sent to an error tracking service like NewRelic.

I think that the single defining characteristic of tracing, then, is that it deals with information that is request-scoped. Any bit of data or metadata that can be bound to lifecycle of a single transactional object in the system. As examples: the duration of an outbound RPC to a remote service; the text of an actual SQL query sent to a database; or the correlation ID of an inbound HTTP request.

With these definitions we can label the overlapping sections.

Certainly a lot of the instrumentation typical to cloud-native applications will end up being request-scoped, and thus may make sense to talk about in a broader context of tracing. But we can now observe that not all instrumentation is bound to request lifecycles: there will be e.g. logical component diagnostic information, or process lifecycle details, that are orthogonal to any discrete request. So not all metrics or logs, for example, can be shoehorned into a tracing system—at least, not without some work. Or, we might realize that instrumenting metrics directly in our application gives us powerful benefits, like a flexible expression language that evaluates on a real-time view of our fleet; in contrast, shoehorning metrics into a logging pipeline may force us to abandon some of those advantages.

From here we can begin to categorize existing systems. Prometheus, for example, started off exclusively as a metrics system, and over time may grow towards tracing and thus into request-scoped metrics, but likely will not move too deeply into the logging space. ELK offers logging and roll-ups, placing it firmly the aggregatable events space, but seems to continuously accrue more features in the other domains, pushing it toward the center.

Further, I observed a curious operational detail as a side-effect of this visualization. Of the three domains, metrics tend to require the fewest resources to manage, as by their nature they “compress” pretty well. Conversely, logging tends to be overwhelming, frequently coming to surpass in volume the production traffic it reports on. (I wrote a bit more on this topic previously.) So we can draw a sort of volume or operational overhead gradient, from metrics (low) to logging (high)—and we observe that tracing probably sits somewhere in the middle.

Maybe it’s not a perfect description of the space, but my sense from the Summit attendees was that this categorization made sense: we were able to speak more productively when it was clear what we were talking about. Maybe this diagram can be useful to you, too, if you’re trying to get a handle on the product space, or clarify conversations in your own organizations.