KubeVirt Architecture

Dec 1, 2022 13:30

The KubeVirt project creates virtual machine instances through the Kubernetes declarative API:

apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: ecs-s4-1
  namespace: wq-test
spec:
  running: true
  template:
    metadata:
      annotations:
        ovn.kubernetes.io/allow_live_migration: "true"
      labels:
        kubevirt.io/vm: ecs-s4-1
    spec:
      domain:
        cpu:
          cores: 1
          sockets: 4
          threads: 1
        devices:
          disks:
          - bootOrder: 1
            disk:
              bus: virtio
            name: bootdisk
          - disk:
              bus: virtio
            name: cloudinitdisk
          interfaces:
          - bridge: {}
            name: attachnet1
          - bridge: {}
            name: attachnet2
        machine:
          type: q35
        memory:
          guest: 8Gi
        resources:
          requests:
            cpu: 2
            memory: 2Gi
      hostname: ecs-s4-1
      networks:
      - multus:
          networkName: mec-nets/attachnet1
        name: attachnet1
      - multus:
          networkName: mec-nets/attachnet2
        name: attachnet2
      volumes:
      - name: bootdisk
        persistentVolumeClaim:
          claimName: ecs-s4-1-bootpvc-4rbd2e
      - cloudInitNoCloud:
          userData: |-
            #cloud-config
            password: fedora
            ssh_pwauth: True
            chpasswd: { expire: False }
        name: cloudinitdisk

The spec.template field of the VirtualMachine CRD defines the desired virtual machine instance. The VM itself (a qemu process) ultimately runs inside a Pod (container).

What is a CRD: https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources/

virt-operator

virt-operator wraps and simplifies the deployment of KubeVirt:

# Point at latest release

$ export RELEASE=$(curl https://storage.googleapis.com/kubevirt-prow/release/kubevirt/kubevirt/stable.txt)

# Deploy the KubeVirt operator

$ kubectl apply -f https://github.com/kubevirt/kubevirt/releases/download/${RELEASE}/kubevirt-operator.yaml

KubeVirt, VirtualMachine, VirtualMachineInstance, and so on are all CRDs of the KubeVirt project:

$ kubectl get crd | grep kubevirt.io

cdiconfigs.cdi.kubevirt.io 2022-10-17T11:22:05Z

cdis.cdi.kubevirt.io 2022-09-27T09:21:14Z

dataimportcrons.cdi.kubevirt.io 2022-10-17T11:22:05Z

datasources.cdi.kubevirt.io 2022-10-17T11:22:05Z

datavolumes.cdi.kubevirt.io 2022-10-17T11:22:05Z

kubevirts.kubevirt.io 2022-09-27T09:21:19Z

migrationpolicies.migrations.kubevirt.io 2022-09-27T09:21:57Z

objecttransfers.cdi.kubevirt.io 2022-10-17T11:22:05Z

storageprofiles.cdi.kubevirt.io 2022-10-17T11:22:05Z

virtualmachineclusterflavors.flavor.kubevirt.io 2022-09-27T09:21:56Z

virtualmachineflavors.flavor.kubevirt.io 2022-09-27T09:21:56Z

virtualmachineinstancemigrations.kubevirt.io 2022-09-27T09:21:56Z

virtualmachineinstancepresets.kubevirt.io 2022-09-27T09:21:56Z

virtualmachineinstancereplicasets.kubevirt.io 2022-09-27T09:21:56Z

virtualmachineinstances.kubevirt.io 2022-09-27T09:21:56Z

virtualmachinepools.pool.kubevirt.io 2022-09-27T09:21:56Z

virtualmachinerestores.snapshot.kubevirt.io 2022-09-27T09:21:56Z

virtualmachines.kubevirt.io 2022-09-27T08:02:37Z

virtualmachinesnapshotcontents.snapshot.kubevirt.io 2022-09-27T09:21:56Z

virtualmachinesnapshots.snapshot.kubevirt.io 2022-09-27T09:21:56Z

The virt-operator controller watches events on the KubeVirt CR object and deploys or removes the KubeVirt components accordingly:

# Point at latest release

$ export RELEASE=$(curl https://storage.googleapis.com/kubevirt-prow/release/kubevirt/kubevirt/stable.txt)

# Create the KubeVirt CR (instance deployment request) which triggers the actual installation

$ kubectl apply -f https://github.com/kubevirt/kubevirt/releases/download/${RELEASE}/kubevirt-cr.yaml

# wait until all KubeVirt components are up

$ kubectl -n kubevirt wait kv kubevirt --for condition=Available

$ kubectl get po -n kubevirt

NAME READY STATUS RESTARTS AGE

virt-api-69d85f4c68-4lk56 1/1 Running 0 2d2h

virt-api-69d85f4c68-r6h8m 1/1 Running 0 2d2h

virt-controller-6d8b7ddfb4-f6s4q 1/1 Running 0 2d2h

virt-controller-6d8b7ddfb4-sm28q 1/1 Running 0 2d2h

virt-handler-7vslm 2/2 Running 0 2d2h

virt-handler-kq2zg 2/2 Running 0 2d2h

virt-handler-qtwrb 2/2 Running 0 2d2h

virt-operator-f9cc84bb5-frrl2 1/1 Running 0 2d2h

virt-operator-f9cc84bb5-z9249 1/1 Running 0 2d2h

The KubeVirt architecture (excluding virt-operator) is shown below:

- virt-api - deployed as a Deployment

$ kubectl get deployment virt-api -n kubevirt

NAME READY UP-TO-DATE AVAILABLE AGE

virt-api 2/2 2 2 61d

- virt-controller - deployed as a Deployment

$ kubectl get deployment virt-controller -n kubevirt

NAME READY UP-TO-DATE AVAILABLE AGE

virt-controller 2/2 2 2 61d

- virt-handler - deployed as a DaemonSet

$ kubectl get ds virt-handler -n kubevirt

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

virt-handler 3 3 3 3 3 kubernetes.io/os=linux 61d

How to deploy KubeVirt: https://kubevirt.io/user-guide/operations/installation/

virt-api

virt-api is KubeVirt's API server. It combines Dynamic Admission Control and an extension API server in one component:

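- Mutating Admission Webhook: when a request (for example, creating a VirtualMachine) reaches the k8s apiserver, it is first forwarded to virt-api, which mutates the resource as the business logic requires. The apiserver then persists the result to etcd.

virt-api is registered as a Mutating Admission Webhook through a mutatingwebhookconfiguration:

$ kubectl get mutatingwebhookconfiguration | grep virt

virt-api-mutator 3 61d

- Validating Admission Webhook: when a request (for example, creating a VirtualMachine) reaches the k8s apiserver, it is forwarded to virt-api, which checks whether the resource definition is valid. Only objects that pass validation are persisted to etcd by the apiserver; invalid resources are rejected.

virt-api is registered as a Validating Admission Webhook through a validatingwebhookconfiguration:

$ kubectl get validatingwebhookconfiguration | grep virt

virt-api-validator 15 61d

virt-operator-validator 2 61d

- Extension API server: a custom apiserver that extends (rather than replaces) the k8s apiserver to meet business needs. KubeVirt uses this mechanism to implement soft reboot and pausing/resuming of VMs.

virt-api is plugged into the k8s apiserver through APIService objects:

$ kubectl get apiservice | grep kubevirt/virt-api

v1.subresources.kubevirt.io kubevirt/virt-api True 61d

v1alpha3.subresources.kubevirt.io kubevirt/virt-api True 61d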
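To make the two webhook roles more concrete, here is a minimal Python sketch of the AdmissionReview responses an admission webhook returns to the apiserver. The response envelope (admission.k8s.io/v1, allowed, patchType, base64-encoded JSON Patch) follows the Kubernetes admission API. The validation rule and the mutation are hypothetical and chosen only for illustration; they are not virt-api's actual logic.

```python
import base64
import json


def validate_vm(review: dict) -> dict:
    """Validating webhook: allow or reject the object in the request."""
    request = review["request"]
    vm = request["object"]
    # Hypothetical rule: a VirtualMachine must carry spec.template.
    allowed = "template" in vm.get("spec", {})
    response = {"uid": request["uid"], "allowed": allowed}
    if not allowed:
        response["status"] = {"message": "spec.template is required"}
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


def mutate_vm(review: dict) -> dict:
    """Mutating webhook: return a JSON Patch the apiserver applies before persisting."""
    request = review["request"]
    # Hypothetical defaulting: set spec.running to false.
    patch = [{"op": "add", "path": "/spec/running", "value": False}]
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }


review = {"request": {"uid": "example-uid", "object": {"kind": "VirtualMachine", "spec": {}}}}
print(validate_vm(review)["response"])
print(mutate_vm(review)["response"]["patchType"])
```

The apiserver calls mutating webhooks first and validating webhooks afterwards, so validation sees the object after all mutations have been applied.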

virt-controller

Once a VirtualMachine object has been created, virt-controller creates the VirtualMachineInstance and then the virt-launcher Pod:

VirtualMachine -> VirtualMachineInstance -> Pod

$ kubectl get vm ecs-s4-1 -n wq-test

NAME AGE STATUS READY

ecs-s4-1 12d Running True

$ kubectl get vmi ecs-s4-1 -n wq-test

NAME AGE PHASE IP NODENAME READY

ecs-s4-1 3d15h Running 172.18.91.229 node165 True

$ kubectl get pod -n wq-test | grep ecs-s4-1

virt-launcher-ecs-s4-1-fdbkg 1/1 Running 0 3d15h

OwnerReferences trace the parent-child relationship between the resources:

$ kubectl get po virt-launcher-ecs-s4-1-fdbkg -n wq-test -o jsonpath='{.metadata.ownerReferences}' | jq

[

{

"apiVersion": "kubevirt.io/v1",

"blockOwnerDeletion": true,

"controller": true,

"kind": "VirtualMachineInstance",

"name": "ecs-s4-1",

"uid": "2b33f85a-5752-4bd0-aadb-2dc06be33772"

}

]

$ kubectl get vmi ecs-s4-1 -n wq-test -o jsonpath='{.metadata.ownerReferences}' | jq

[

{

"apiVersion": "kubevirt.io/v1",

"blockOwnerDeletion": true,

"controller": true,

"kind": "VirtualMachine",

"name": "ecs-s4-1",

"uid": "d61db8e3-060e-4f44-bf76-ec76b2be8831"

}

]
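The two lookups above can be chained. The following Python sketch walks the controller ownerReferences from the Pod up to the VirtualMachine, using in-memory objects that mirror the output shown. The `owner_chain` helper and the uid-keyed index are illustrative assumptions; against a real cluster each step would be an API lookup.

```python
def owner_chain(obj: dict, index: dict) -> list:
    """Follow controller ownerReferences upward; index maps uid -> object."""
    chain = [(obj["kind"], obj["metadata"]["name"])]
    while True:
        refs = obj["metadata"].get("ownerReferences", [])
        controller = next((r for r in refs if r.get("controller")), None)
        if controller is None:
            return chain
        chain.append((controller["kind"], controller["name"]))
        obj = index.get(controller["uid"])
        if obj is None:  # owner not available in the index
            return chain


VM_UID = "d61db8e3-060e-4f44-bf76-ec76b2be8831"
VMI_UID = "2b33f85a-5752-4bd0-aadb-2dc06be33772"

vm = {"kind": "VirtualMachine", "metadata": {"name": "ecs-s4-1", "uid": VM_UID}}
vmi = {
    "kind": "VirtualMachineInstance",
    "metadata": {
        "name": "ecs-s4-1",
        "uid": VMI_UID,
        "ownerReferences": [
            {"kind": "VirtualMachine", "name": "ecs-s4-1", "uid": VM_UID, "controller": True}
        ],
    },
}
pod = {
    "kind": "Pod",
    "metadata": {
        "name": "virt-launcher-ecs-s4-1-fdbkg",
        "ownerReferences": [
            {"kind": "VirtualMachineInstance", "name": "ecs-s4-1", "uid": VMI_UID, "controller": True}
        ],
    },
}

index = {VM_UID: vm, VMI_UID: vmi}
print(" <- ".join(kind for kind, _ in owner_chain(pod, index)))
```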

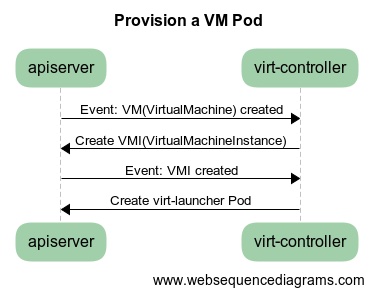

How virt-controller interacts with the k8s apiserver after a user creates a VirtualMachine object:

virt-controller is essentially a set of controllers that watch and handle events on KubeVirt objects such as VirtualMachine and VirtualMachineInstance:

- On seeing a VirtualMachine (VM) object created, it creates a VirtualMachineInstance (VMI) object from the VMI template in .spec.template

- On seeing the VirtualMachineInstance (VMI) creation event, it converts the VMI into a virt-launcher Pod and creates that Pod
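The first of these steps can be sketched in a few lines of Python. This is a deliberately simplified illustration, not virt-controller's actual code: the `vmi_from_vm` helper is hypothetical, and the real controller handles many more fields. It only shows the idea that the VMI is stamped out of the VM's .spec.template and points back at the VM through an ownerReference.

```python
import copy


def vmi_from_vm(vm: dict) -> dict:
    """Build a VMI from a VM's spec.template (simplified illustration)."""
    template = vm["spec"]["template"]
    metadata = copy.deepcopy(template.get("metadata", {}))
    # In the example in this article the VMI carries the VM's name and namespace.
    metadata["name"] = vm["metadata"]["name"]
    metadata["namespace"] = vm["metadata"]["namespace"]
    metadata["ownerReferences"] = [
        {
            "apiVersion": "kubevirt.io/v1",
            "kind": "VirtualMachine",
            "name": vm["metadata"]["name"],
            "uid": vm["metadata"]["uid"],
            "controller": True,
            "blockOwnerDeletion": True,
        }
    ]
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachineInstance",
        "metadata": metadata,
        "spec": copy.deepcopy(template["spec"]),
    }


vm = {
    "kind": "VirtualMachine",
    "metadata": {"name": "ecs-s4-1", "namespace": "wq-test",
                 "uid": "d61db8e3-060e-4f44-bf76-ec76b2be8831"},
    "spec": {
        "running": True,
        "template": {
            "metadata": {"labels": {"kubevirt.io/vm": "ecs-s4-1"}},
            "spec": {"domain": {"cpu": {"cores": 1, "sockets": 4, "threads": 1}}},
        },
    },
}
vmi = vmi_from_vm(vm)
print(vmi["kind"], vmi["metadata"]["name"], vmi["metadata"]["ownerReferences"][0]["kind"])
```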

virt-launcher

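In KubeVirt a virtual machine instance, that is, the qemu process, runs inside a Pod (container). This lets the native Kubernetes scheduler, kube-scheduler, schedule it like any other workload (in OpenStack, Nova schedules instances).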

The virt-launcher Pod definition that virt-controller generates from the VMI object:

apiVersion: v1
kind: Pod
metadata:
  annotations:
    # a lot of annotations here
  name: virt-launcher-ecs-s4-1-fdbkg
  namespace: wq-test
  ownerReferences:
  - apiVersion: kubevirt.io/v1
    blockOwnerDeletion: true
    controller: true
    kind: VirtualMachineInstance
    name: ecs-s4-1
    uid: 2b33f85a-5752-4bd0-aadb-2dc06be33772
  resourceVersion: "34337393"
  uid: bba486d7-2d7f-4fe9-bb66-9e01a75405f1
spec:
  automountServiceAccountToken: true
  containers:
  - command:
    - /usr/bin/virt-launcher
    - --qemu-timeout
    - 308s
    - --name
    - ecs-s4-1
    - --uid
    - 2b33f85a-5752-4bd0-aadb-2dc06be33772
    - --namespace
    - wq-test
    - --kubevirt-share-dir
    - /var/run/kubevirt
    - --ephemeral-disk-dir
    - /var/run/kubevirt-ephemeral-disks
    - --container-disk-dir
    - /var/run/kubevirt/container-disks
    - --grace-period-seconds
    - "45"
    - --hook-sidecars
    - "0"
    - --ovmf-path
    - /usr/share/OVMF
    env:
    - name: KUBEVIRT_RESOURCE_NAME_attachnet2
    - name: KUBEVIRT_RESOURCE_NAME_attachnet1
    - name: POD_NAME
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.name
    image: registry-1.ict-mec.net:18443/kubevirt/virt-launcher:release-0.51-ecs-57fe227f5-20220920081906
    imagePullPolicy: IfNotPresent
    name: compute
    resources:
      limits:
        devices.kubevirt.io/kvm: "1"
        devices.kubevirt.io/tun: "1"
        devices.kubevirt.io/vhost-net: "1"
      requests:
        cpu: "2"
        devices.kubevirt.io/kvm: "1"
        devices.kubevirt.io/tun: "1"
        devices.kubevirt.io/vhost-net: "1"
        ephemeral-storage: 50M
        memory: 2246Mi

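virt-controller translates the VM instance definition into the resources of the Pod's compute container (.spec.containers[].resources). Scheduling, in essence, means working out what resources an application needs, picking the most suitable node, and starting the application there. OpenStack Nova and kube-scheduler play a similar role.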
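A short Python sketch of the arithmetic behind this translation, using only numbers that appear in this article. The VM requests 2Gi of memory, while the compute container of the Pod requests 2246Mi; the difference is what this one example shows as extra memory on top of the guest request. How KubeVirt arrives at that figure is not covered here, and the `to_bytes` helper is an illustrative assumption that handles binary suffixes only.

```python
# Binary suffixes only; decimal quantities such as "50M" are out of scope here.
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as "2Gi" or "2246Mi" to bytes."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


vm_request = to_bytes("2Gi")      # VM: domain.resources.requests.memory
pod_request = to_bytes("2246Mi")  # Pod: compute container requests.memory
overhead_mi = (pod_request - vm_request) // 2**20

# Guest CPU topology from the VM spec: sockets * cores * threads.
vcpus = 4 * 1 * 1

print(f"observed overhead: {overhead_mi}Mi, guest vCPUs: {vcpus}")
```

Note that the guest sees 4 vCPUs and 8Gi of memory (domain.memory.guest), while the scheduler only accounts for the requests: 2 CPUs and 2246Mi.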

All processes in the virt-launcher container:

$ kubectl exec -it virt-launcher-ecs-s4-1-fdbkg -n wq-test -- ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 Nov23 ? 00:01:29 /usr/bin/virt-launcher --qemu-timeout 308s --name ecs-s4-1 --uid 2b33f85a-5752-4bd0-aadb-2dc06be33772 --namespace wq-test --kubevirt-share-dir /var/run/kubevirt -

root 25 1 0 Nov23 ? 00:08:28 /usr/bin/virt-launcher --qemu-timeout 308s --name ecs-s4-1 --uid 2b33f85a-5752-4bd0-aadb-2dc06be33772 --namespace wq-test --kubevirt-share-dir /var/run/kubevirt -

root 46 25 0 Nov23 ? 00:01:02 /usr/sbin/virtlogd -f /etc/libvirt/virtlogd.conf

root 47 25 0 Nov23 ? 00:04:50 /usr/sbin/libvirtd -f /var/run/libvirt/libvirtd.conf

qemu 100 1 0 Nov23 ? 00:40:42 /usr/libexec/qemu-kvm -name guest=wq-test_ecs-s4-1,debug-threads=on -S -object {"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/lib/libvirt/qemu

virt-launcher -> libvirtd -> qemu

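virt-launcher is PID 1 of the container that hosts the VM instance. Like OpenStack, KubeVirt manages the lifecycle of the VM instance (the qemu process) through libvirt (a client-server architecture similar to Docker) rather than driving the qemu process directly (as the Kata Containers project does).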

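- virt-launcher starts the libvirtd process as the VM management service, and talks to libvirtd over a unix socket to call the libvirt API

- Performs some initialization work

- On the start signal sent by virt-handler, calls the libvirt API to create and start the VM instance

- Connects to qemu-guest-agent in the guest OS, writes some guest OS information into the VMI status, and listens for operations on the instance (password changes and so on)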

How KubeVirt uses a k8s PVC as a cloud disk (volume)

Look at the volume definition of the virt-launcher Pod:

apiVersion: v1
kind: Pod
metadata:
  annotations:
    # a lot of annotations here
  name: virt-launcher-ecs-s4-1-fdbkg
  namespace: wq-test
spec:
  containers:
  - name: compute
    volumeDevices:
    - devicePath: /dev/bootdisk
      name: bootdisk
  volumes:
  - name: bootdisk
    persistentVolumeClaim:
      claimName: ecs-s4-1-bootpvc-4rbd2e

The boot volume PVC ecs-s4-1-bootpvc-4rbd2e already has the VM's raw image written into it (note that this PVC uses Block volume mode).

$ kubectl get pvc ecs-s4-1-bootpvc-4rbd2e -n wq-test -o jsonpath='{.spec.volumeMode}'

Block

$ kubectl exec -it virt-launcher-ecs-s4-1-fdbkg -n wq-test -- ls -al /dev/bootdisk

brw-rw---- 1 qemu qemu 252, 304 Nov 28 13:44 /dev/bootdisk # "b" marks this "file" as a block device
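The same check can be made programmatically. As a small sketch using Python's standard `stat` module, the mode bits below reproduce the `brw-rw----` string from the listing, and `is_block_device` (a helper name chosen for this example) tests a path the way one might inside the container.

```python
import os
import stat


def is_block_device(path: str) -> bool:
    """True if path refers to a block device node."""
    return stat.S_ISBLK(os.stat(path).st_mode)


# Block device type bit plus rw for owner and group, as in the listing above.
mode = stat.S_IFBLK | 0o660
print(stat.filemode(mode))   # symbolic form, as printed by ls -l
print(stat.S_ISBLK(mode))
```

Inside the virt-launcher container, `is_block_device("/dev/bootdisk")` would be the equivalent call; it is not run here because the device only exists in that Pod.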

The qemu process emulates disk I/O by reading and writing the block device:

$ kubectl exec -it virt-launcher-ecs-s4-1-fdbkg -n wq-test -- cat /proc/100/cmdline

/usr/libexec/qemu-kvm-nameguest=wq-test_ecs-s4-1,debug-threads=on-S-object{"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/lib/libvirt/qemu/domain-1-wq-test_ecs-s4-1/master-key.aes"}-machinepc-q35-rhel8.5.0,accel=kvm,usb=off,dump-guest-core=off-cpuIvyBridge-IBRS,ss=on,vmx=on,pdcm=on,pcid=on,hypervisor=on,arat=on,tsc-adjust=on,umip=on,md-clear=on,stibp=on,arch-capabilities=on,ssbd=on,xsaveopt=on,pdpe1gb=on,ibpb=on,ibrs=on,amd-stibp=on,amd-ssbd=on,skip-l1dfl-vmentry=on,pschange-mc-no=on-msize=8388608k,slots=16,maxmem=134217728k-overcommitmem-lock=off-smp4,maxcpus=128,sockets=128,dies=1,cores=1,threads=1-object{"qom-type":"iothread","id":"iothread1"}-object{"qom-type":"memory-backend-ram","id":"ram-node0","size":8589934592}-numanode,nodeid=0,cpus=0-127,memdev=ram-node0-uuid851e7efa-9740-58d8-b321-179249b9b17e-smbiostype=1,manufacturer=KubeVirt,product=None,uuid=851e7efa-9740-58d8-b321-179249b9b17e,family=KubeVirt-no-user-config-nodefaults-chardevsocket,id=charmonitor,fd=20,server=on,wait=off-monchardev=charmonitor,id=monitor,mode=control-rtcbase=localtime-no-shutdown-bootstrict=on-devicepcie-root-port,port=0x18,chassis=8,id=pci.8,bus=pcie.0,multifunction=on,addr=0x3-devicepcie-root-port,port=0x19,chassis=9,id=pci.9,bus=pcie.0,addr=0x3.0x1-devicepcie-root-port,port=0x1a,chassis=10,id=pci.10,bus=pcie.0,addr=0x3.0x2-devicepcie-root-port,port=0x1b,chassis=11,id=pci.11,bus=pcie.0,addr=0x3.0x3-devicepcie-root-port,port=0x1c,chassis=12,id=pci.12,bus=pcie.0,addr=0x3.0x4-devicepcie-root-port,port=0x10,chassis=1,id=pci.1,bus=pcie.0,multifunction=on,addr=0x2-devicepcie-root-port,port=0x11,chassis=2,id=pci.2,bus=pcie.0,addr=0x2.0x1-devicepcie-root-port,port=0x12,chassis=3,id=pci.3,bus=pcie.0,addr=0x2.0x2-devicepcie-root-port,port=0x13,chassis=4,id=pci.4,bus=pcie.0,addr=0x2.0x3-devicepcie-root-port,port=0x14,chassis=5,id=pci.5,bus=pcie.0,addr=0x2.0x4-devicepcie-root-port,port=0x15,chassis=6,id=pci.6,bus=pcie.0,addr=0x2.0x5-devicepcie-root-port,port=0x16,chassis=7,
id=pci.7,bus=pcie.0,addr=0x2.0x6-devicevirtio-scsi-pci-non-transitional,id=scsi0,bus=pci.3,addr=0x0-devicevirtio-serial-pci-non-transitional,id=virtio-serial0,bus=pci.4,addr=0x0-blockdev{"driver":"host_device","filename":"/dev/bootdisk","aio":"native","node-name":"libvirt-2-storage","cache":{"direct":true,"no-flush":false},"auto-read-only":true,"discard":"unmap"}-blockdev{"node-name":"libvirt-2-format","read-only":false,"discard":"unmap","cache":{"direct":true,"no-flush":false},"driver":"raw","file":"libvirt-2-storage"}-devicevirtio-blk-pci-non-transitional,bus=pci.5,addr=0x0,drive=libvirt-2-format,id=ua-bootdisk,bootindex=1,write-cache=on,werror=stop,rerror=stop-blockdev{"driver":"file","filename":"/var/run/kubevirt-ephemeral-disks/cloud-init-data/wq-test/ecs-s4-1/noCloud.iso","node-name":"libvirt-1-storage","cache":{"direct":true,"no-flush":false},"auto-read-only":true,"discard":"unmap"}-blockdev{"node-name":"libvirt-1-format","read-only":false,"discard":"unmap","cache":{"direct":true,"no-flush":false},"driver":"raw","file":"libvirt-1-storage"}-devicevirtio-blk-pci-non-transitional,bus=pci.6,addr=0x0,drive=libvirt-1-format,id=ua-cloudinitdisk,write-cache=on,werror=stop,rerror=stop-netdevtap,fd=22,id=hostua-attachnet1,vhost=on,vhostfd=23-devicevirtio-net-pci-non-transitional,host_mtu=1500,netdev=hostua-attachnet1,id=ua-attachnet1,mac=00:00:00:28:54:77,bus=pci.1,addr=0x0,romfile=-netdevtap,fd=24,id=hostua-attachnet2,vhost=on,vhostfd=25-devicevirtio-net-pci-non-transitional,host_mtu=1500,netdev=hostua-attachnet2,id=ua-attachnet2,mac=00:00:00:be:63:8e,bus=pci.2,addr=0x0,romfile=-chardevsocket,id=charserial0,fd=26,server=on,wait=off-deviceisa-serial,chardev=charserial0,id=serial0-chardevsocket,id=charchannel0,fd=27,server=on,wait=off-devicevirtserialport,bus=virtio-serial0.0,nr=1,chardev=charchannel0,id=channel0,name=org.qemu.guest_agent.0-audiodevid=audio1,driver=none-vncvnc=unix:/var/run/kubevirt-private/2b33f85a-5752-4bd0-aadb-2dc06be33772/virt-vnc,audiodev=audio1-d
eviceVGA,id=video0,vgamem_mb=16,bus=pcie.0,addr=0x1-devicevirtio-balloon-pci-non-transitional,id=balloon0,bus=pci.7,addr=0x0-sandboxon,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny-msgtimestamp=on
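The output above is hard to read because /proc/<pid>/cmdline separates arguments with NUL bytes, which `cat` prints as nothing, so the arguments run together. A minimal Python sketch that splits such a buffer and pulls the backing files out of the `-blockdev` arguments follows. The sample bytes are a shortened excerpt modeled on the output above, and both helper names are illustrative.

```python
import json


def split_cmdline(raw: bytes) -> list:
    """Split the NUL-separated contents of /proc/<pid>/cmdline into arguments."""
    return [arg.decode() for arg in raw.split(b"\0") if arg]


def blockdev_files(argv: list) -> list:
    """Return (driver, filename) for every -blockdev entry that names a file."""
    files = []
    for flag, value in zip(argv, argv[1:]):
        if flag == "-blockdev":
            spec = json.loads(value)
            if "filename" in spec:
                files.append((spec["driver"], spec["filename"]))
    return files


# Shortened sample; the real command line carries many more arguments.
raw = b"\0".join([
    b"/usr/libexec/qemu-kvm",
    b"-name", b"guest=wq-test_ecs-s4-1,debug-threads=on",
    b"-blockdev",
    b'{"driver":"host_device","filename":"/dev/bootdisk","node-name":"libvirt-2-storage"}',
    b"-blockdev",
    b'{"node-name":"libvirt-2-format","driver":"raw","file":"libvirt-2-storage"}',
]) + b"\0"

argv = split_cmdline(raw)
print(blockdev_files(argv))
```

The `host_device` entry is the one that points qemu at /dev/bootdisk; the `raw` format node is layered on top of it by node name.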

How the VM connects to the external network

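The qemu process lives in the virt-launcher Pod. Because the Kubernetes network model requires full connectivity across the cluster, the network namespace shared by virt-launcher and qemu can reach the external network out of the box.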

The qemu process operates a tap device (created by virt-handler), which turns network traffic into a file stream against a character device on the host:

$ kubectl exec -it virt-launcher-ecs-test3-9h5jg -n ns-demo -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: net1: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 96:12:a6:f0:83:ec brd ff:ff:ff:ff:ff:ff

inet 192.168.100.7/24 brd 192.168.100.255 scope global net1

valid_lft forever preferred_lft forever

3: k6t-net1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue state UP group default

link/ether 02:00:00:f3:33:48 brd ff:ff:ff:ff:ff:ff

inet 169.254.75.10/32 scope global k6t-net1

valid_lft forever preferred_lft forever

inet6 fe80::ff:fef3:3348/64 scope link

valid_lft forever preferred_lft forever

4: tap1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc fq_codel master k6t-net1 state UP group default qlen 1000

link/ether 46:7f:24:8d:6d:2f brd ff:ff:ff:ff:ff:ff

inet6 fe80::447f:24ff:fe8d:6d2f/64 scope link

valid_lft forever preferred_lft forever

$ kubectl exec -it virt-launcher-ecs-test3-9h5jg -n ns-demo -- virsh dumpxml 1

<domain type='kvm' id='1'>

<devices>

<interface type='ethernet'>

<mac address='00:00:00:bf:e0:70'/>

<target dev='tap1' managed='no'/>

<model type='virtio-non-transitional'/>

<mtu size='1400'/>

<alias name='ua-attachnet1'/>

<rom enabled='no'/>

<address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>

</interface>

</devices>

</domain>

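The tap device is in turn attached, through the k6t-net1 bridge (created by virt-handler), to the container network interface (veth), which connects the VM to the external network.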
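To relate the libvirt domain to the interfaces listed by `ip a`, the tap device name can be read out of the domain XML. The sketch below uses Python's standard `xml.etree.ElementTree` on a trimmed copy of the `virsh dumpxml` output shown earlier; the `tap_devices` helper is an illustrative name.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the domain XML shown above.
DOMAIN_XML = """
<domain type='kvm' id='1'>
  <devices>
    <interface type='ethernet'>
      <mac address='00:00:00:bf:e0:70'/>
      <target dev='tap1' managed='no'/>
      <mtu size='1400'/>
      <alias name='ua-attachnet1'/>
    </interface>
  </devices>
</domain>
"""


def tap_devices(xml_text: str) -> dict:
    """Map each interface alias to the tap device it is backed by."""
    root = ET.fromstring(xml_text.strip())
    result = {}
    for iface in root.findall("./devices/interface"):
        alias = iface.find("alias").get("name")
        result[alias] = iface.find("target").get("dev")
    return result


print(tap_devices(DOMAIN_XML))
```

Here the interface with alias ua-attachnet1 is backed by tap1, which `ip a` reports as enslaved to k6t-net1 (`master k6t-net1`).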

virt-handler

virt-handler is deployed as a DaemonSet, with one process running on every node:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: kubevirt
    app.kubernetes.io/managed-by: virt-operator
    app.kubernetes.io/version: release-0.51-ecs-366803fed-20221011072723
    kubevirt.io: virt-handler
  name: virt-handler
  namespace: kubevirt
spec:
  selector:
    matchLabels:
      kubevirt.io: virt-handler
  template:
    spec:
      containers:
      - command:
        - virt-handler
        - --port
        - "8443"
        - --hostname-override
        - $(NODE_NAME)
        - --pod-ip-address
        - $(MY_POD_IP)
        - --max-metric-requests
        - "3"
        - --console-server-port
        - "8186"
        - --graceful-shutdown-seconds
        - "315"
        - -v
        - "2"
        securityContext:
          privileged: true
          seLinuxOptions:
            level: s0

It is essentially a controller too, watching and handling events on resources such as VirtualMachineInstance:

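- Creates the network devices that the libvirt domain needs in order to start (bridge, tap/tun devices)

- Initializes the Filesystem-mode PVs mounted in the virt-launcher Pod (generates the data disk files)

- Tells virt-launcher to start, restart, or shut down the instance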

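Why does virt-handler have to send the start signal to virt-launcher, instead of virt-launcher bringing up the instance as soon as it starts? Because only virt-handler knows whether the preparation work has finished.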

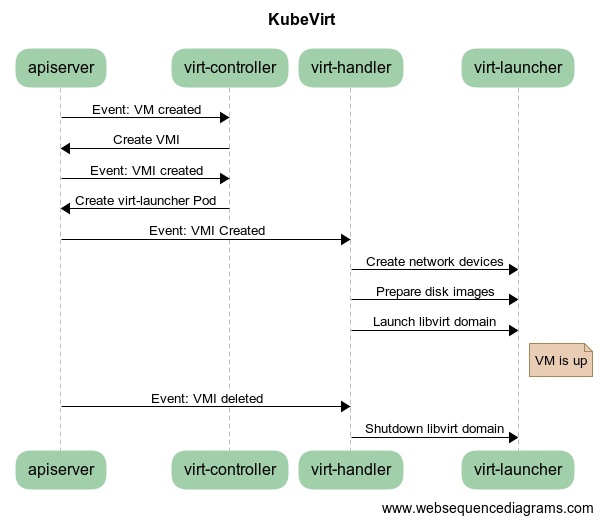

The complete flow for starting a VM instance:

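Why is the virt-handler daemon needed, rather than letting virt-launcher prepare and start the instance by itself? Because the processes in the virt-launcher Pod lack sufficient privileges, whereas the virt-handler Pod runs as a privileged container and can modify the network stack in other containers' network namespaces.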

Flow chart of KubeVirt creating a VirtualMachineInstance: