Containers, Virtual Machines, and Docker

Jan 19, 2019 12:20 · 3772 words · 8 minute read

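If you’re a programmer or techie, chances are you’ve at least heard of Docker: a helpful tool for packing, shipping, and running applications within “containers.” It’d be hard not to, with all the attention it’s getting these days from developers and system admins alike. Even big players like Google, VMware and Amazon are building services to support it.

Regardless of whether you have an immediate use case in mind for Docker, I think it’s important to understand some of the fundamental concepts around what a “container” is and how it compares to a virtual machine (VM). While the Internet is full of excellent usage guides for Docker, I couldn’t find many beginner-friendly conceptual guides, particularly on what a container is made up of. So, hopefully, this post will solve that problem :)

Let’s start by understanding what VMs and containers even are.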

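What are “containers” and “VMs”?

Containers and VMs are similar in their goals: to isolate an application and its dependencies into a self-contained unit that can run anywhere.

Moreover, containers and VMs reduce the need for dedicated physical hardware per application, allowing for more efficient use of computing resources, both in terms of energy consumption and cost effectiveness.

The main difference between containers and VMs is in their architectural approach. Let’s take a closer look.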

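Virtual Machines

A VM is essentially an emulation of a real computer that executes programs like a real computer. VMs run on top of a physical machine using a “hypervisor”. A hypervisor, in turn, runs either on top of a host operating system or directly on “bare metal”.

Let’s unpack the jargon:

A hypervisor is a piece of software, firmware, or hardware that VMs run on top of. The hypervisor itself runs on a physical computer, referred to as the “host machine”. The host machine provides the VMs with resources, including RAM and CPU. These resources are divided between VMs and can be distributed as you see fit. So if one VM is running a more resource-heavy application, you might allocate more resources to that one than to the other VMs running on the same host machine.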
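To make the idea of dividing host resources concrete, here is a minimal sketch in Python. The `Host` class and its methods are invented for illustration and do not correspond to any real hypervisor API; the sketch also assumes resources are never overcommitted, which real hypervisors may allow.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical host whose RAM and CPU cores are shared out to VMs."""
    ram_gb: int
    cpu_cores: int
    # Maps a VM name to its (ram_gb, cpu_cores) allocation.
    vms: dict = field(default_factory=dict)

    def free(self):
        """Return the (ram_gb, cpu_cores) not yet assigned to any VM."""
        used_ram = sum(ram for ram, _ in self.vms.values())
        used_cpu = sum(cpu for _, cpu in self.vms.values())
        return self.ram_gb - used_ram, self.cpu_cores - used_cpu

    def allocate(self, name, ram_gb, cpu_cores):
        """Assign or resize a VM's share, refusing to exceed host capacity."""
        previous = self.vms.pop(name, None)  # release any existing share first
        free_ram, free_cpu = self.free()
        if ram_gb > free_ram or cpu_cores > free_cpu:
            if previous is not None:
                self.vms[name] = previous  # restore the old allocation
            raise ValueError(f"not enough free resources for {name}")
        self.vms[name] = (ram_gb, cpu_cores)


host = Host(ram_gb=32, cpu_cores=8)
host.allocate("web", ram_gb=4, cpu_cores=2)
host.allocate("database", ram_gb=8, cpu_cores=2)
# The database VM turns out to be the heavier workload, so give it more.
host.allocate("database", ram_gb=16, cpu_cores=4)
print(host.free())  # (12, 2)
```

The point of the sketch is only that the host's capacity is a fixed pool: giving one VM more means less is left for the others.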

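The VM that is running on the host machine (again, using a hypervisor) is also often called a “guest machine.” This guest machine contains both the application and whatever it needs to run that application (e.g. system binaries and libraries). It also carries an entire virtualized hardware stack of its own, including virtualized network adapters, storage, and CPU, which means it also has its own full-fledged guest operating system. From the inside, the guest machine behaves as its own unit with its own dedicated resources. From the outside, we know that it’s a VM sharing resources provided by the host machine.

As mentioned above, a guest machine can run on either a hosted hypervisor or a bare-metal hypervisor. There are some important differences between them.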

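First off, a hosted hypervisor runs on the operating system of the host machine. For example, a computer running OS X can have a hosted hypervisor such as VirtualBox installed on top of that OS (VMware Workstation plays the same role on Windows and Linux). The VM doesn’t have direct access to hardware, so it has to go through the host operating system (in our case, OS X).

The benefit of a hosted hypervisor is that the underlying hardware is less important. The host’s operating system is responsible for the hardware drivers instead of the hypervisor itself, so a hosted hypervisor is considered to have more “hardware compatibility.” On the other hand, this additional layer between the hardware and the hypervisor creates more resource overhead, which lowers the performance of the VM.

A bare-metal hypervisor tackles the performance issue by installing on and running directly from the host machine’s hardware. Because it interfaces directly with the underlying hardware, it doesn’t need a host operating system to run on. In this case, the hypervisor is the first thing installed on the host machine, in place of an operating system. Unlike the hosted hypervisor, a bare-metal hypervisor has its own device drivers and interacts with each component directly for any I/O, processing, or OS-specific tasks. This results in better performance, scalability, and stability. The tradeoff is that hardware compatibility is limited, because the hypervisor can only have so many device drivers built into it.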
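The difference between the two hypervisor types comes down to which layers sit between the guest and the hardware. A small Python sketch of the two stacks, with layer names chosen here purely for illustration:

```python
# Layers from the physical hardware up to the application, per setup.
HOSTED = ["hardware", "host OS", "hypervisor", "guest OS", "application"]
BARE_METAL = ["hardware", "hypervisor", "guest OS", "application"]


def layers_between(stack, lower, upper):
    """Return the layers strictly between two named layers of a stack."""
    return stack[stack.index(lower) + 1 : stack.index(upper)]


# What a guest OS must pass through to reach the hardware in each case.
print(layers_between(HOSTED, "hardware", "guest OS"))      # ['host OS', 'hypervisor']
print(layers_between(BARE_METAL, "hardware", "guest OS"))  # ['hypervisor']
```

The extra `host OS` entry in the hosted stack is the additional layer that buys hardware compatibility at the cost of overhead.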

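After all this talk about hypervisors, you might be wondering why we need this additional “hypervisor” layer between the VM and the host machine at all.

Well, since the VM has an operating system of its own, the hypervisor plays an essential role in providing the VMs with a platform to manage and execute this guest operating system. It allows host computers to share their resources among the virtual machines that are running as guests on top of them.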

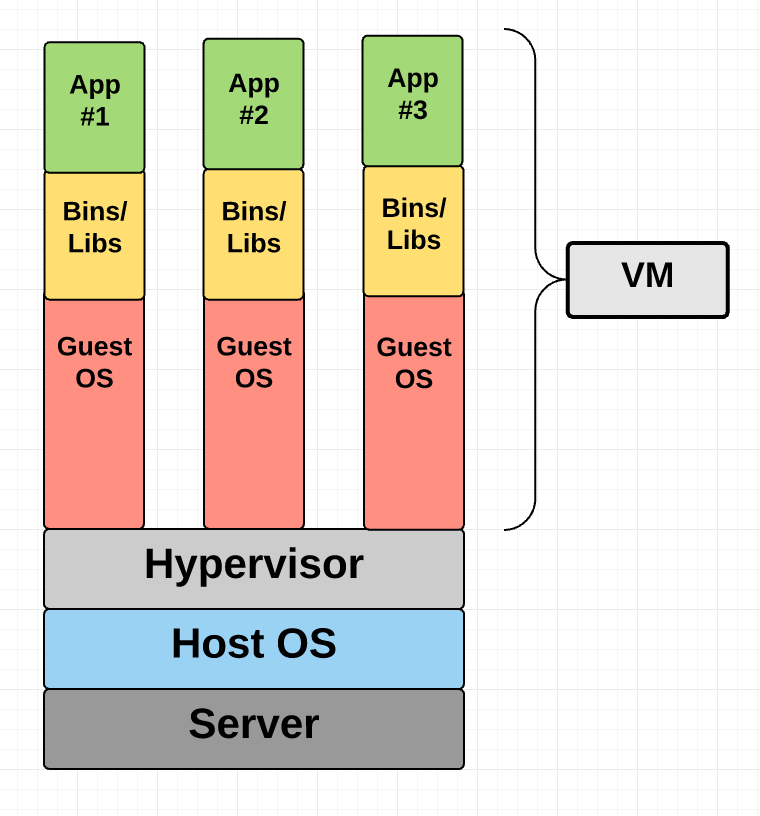

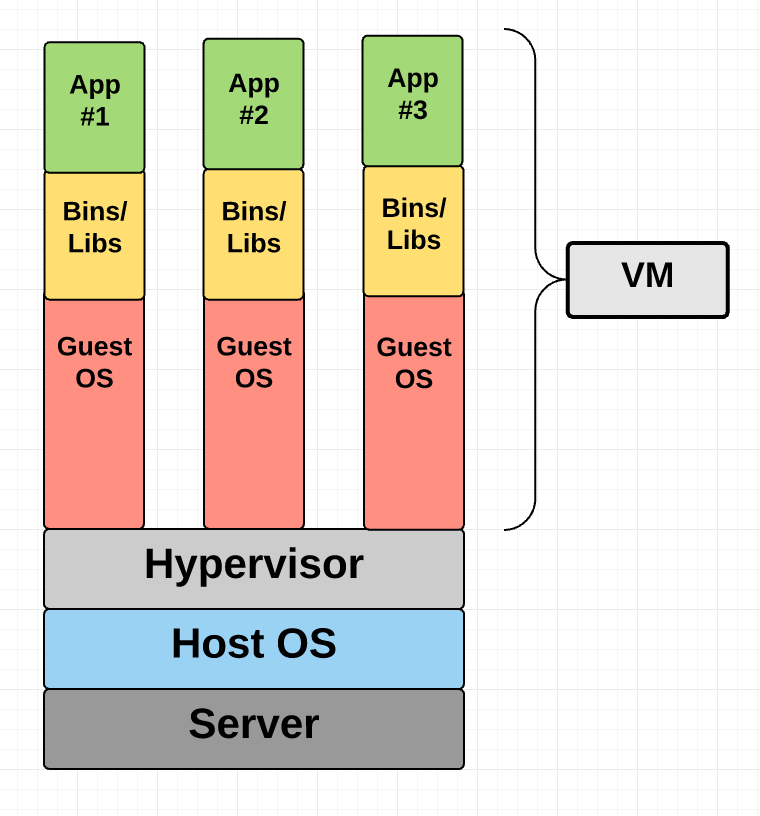

As you can see in the diagram, VMs package up the virtual hardware, a kernel (i.e. OS) and user space for each new VM.

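Containers

Unlike a VM, which provides hardware virtualization, a container provides operating-system-level virtualization by abstracting the “user space”. You’ll see what I mean as we unpack the term container.

For all intents and purposes, containers look like a VM. For example, they have private space for processing, can execute commands as root, have a private network interface and IP address, allow custom routes and iptables rules, can mount file systems, and so on.

The one big difference between containers and VMs is that containers share the host system’s kernel with other containers.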

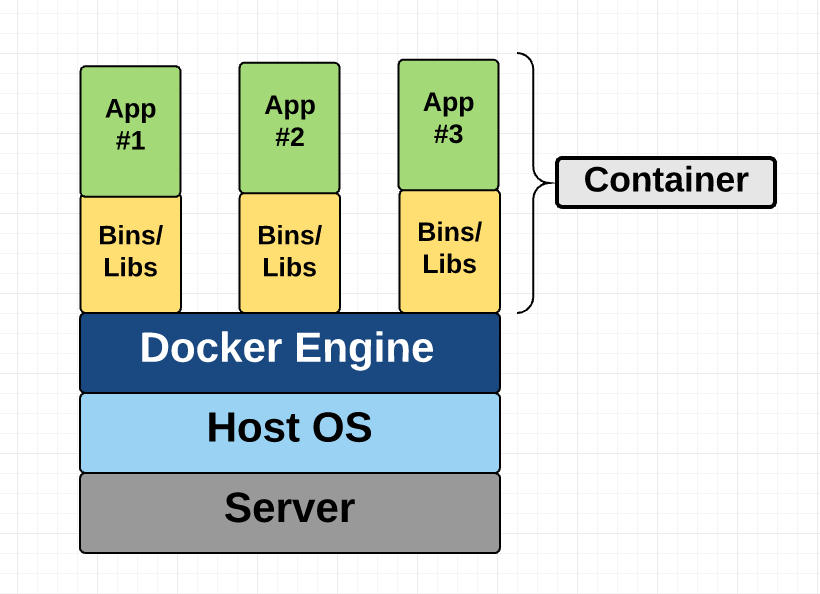

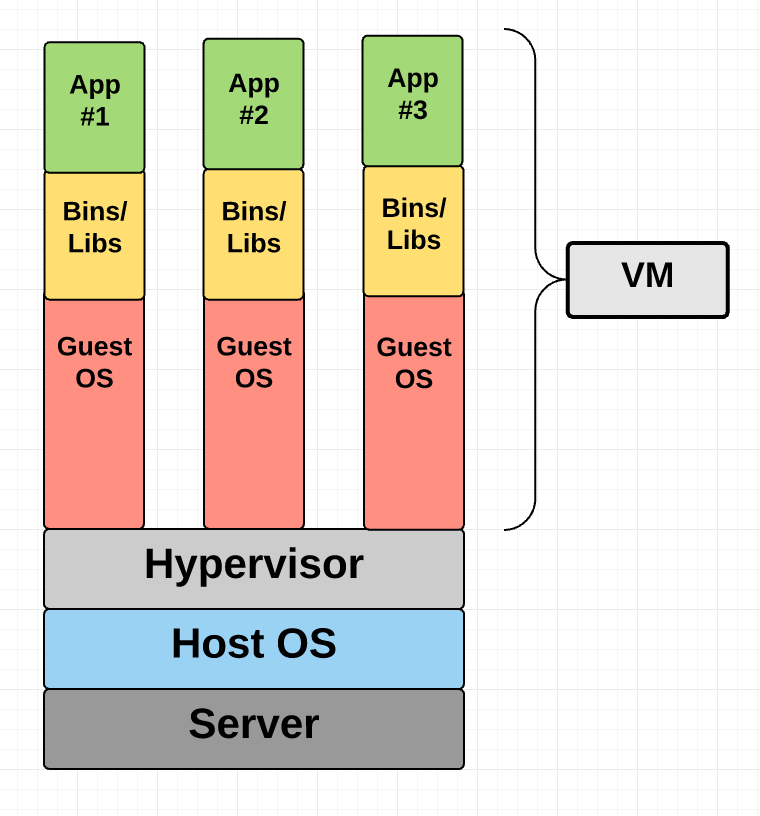

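This diagram shows that containers package up just the user space, and not the kernel or virtual hardware like a VM does. Each container gets its own isolated user space to allow multiple containers to run on a single host machine. We can see that all the operating-system-level architecture is shared across containers. The only parts that are created from scratch are the bins and libs. This is what makes containers so lightweight.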
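One way to observe this sharing is to ask the running kernel to identify itself. The sketch below uses only Python's standard `platform` module. On a Linux host, running it directly and then inside a container on that host should report the same kernel release, because the container has no kernel of its own. Inside a VM it would report the guest's kernel instead.

```python
import platform


def kernel_info():
    """Return the name and release of the kernel this process runs on."""
    return {"system": platform.system(), "release": platform.release()}


print(kernel_info())
```

The output depends on the machine, so no expected value is shown here.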

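Where does Docker come in?

Docker is an open-source project based on Linux containers. It uses Linux kernel features like namespaces and control groups to create containers on top of an operating system.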
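Both kernel features can be inspected from any Linux process through the `/proc` filesystem. The following is a minimal, Linux-only sketch; the function names are made up for this example, and on other systems the functions simply return empty results. It shows which namespaces and control groups the current process belongs to, not how Docker itself sets them up.

```python
import os


def list_namespaces(pid="self"):
    """Map namespace type to its identifier for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is unavailable
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink such as "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable with current permissions
    return namespaces


def read_cgroups(pid="self"):
    """Return the lines of /proc/<pid>/cgroup, or [] if unavailable."""
    try:
        with open(f"/proc/{pid}/cgroup") as handle:
            return [line.strip() for line in handle if line.strip()]
    except OSError:
        return []


for name, ident in list_namespaces().items():
    print(f"{name:10} {ident}")
for line in read_cgroups():
    print("cgroup:", line)
```

Two processes that show the same identifier for a namespace type share that namespace. A process inside a container will typically show different identifiers from a process running directly on the host.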

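Containers are far from new; Google has been using its own container technology for years. Other container technologies, including Solaris Zones, BSD jails, and LXC, have also been around for many years.

So why is Docker all of a sudden gaining steam?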

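- **Ease of use:** Docker has made it much easier for anyone (developers, systems admins, architects and others) to take advantage of containers in order to quickly build and test portable applications. It allows anyone to package an application on their laptop, which in turn can run unmodified on any public cloud, private cloud, or even bare metal. The mantra is: “build once, run anywhere.”

- **Speed:** Docker containers are very lightweight and fast. Since containers are just sandboxed environments running on the kernel, they take up fewer resources. You can create and run a Docker container in seconds, whereas a VM takes longer because it has to boot up a full virtual operating system every time.

- **Docker Hub:** Docker users also benefit from the increasingly rich ecosystem of Docker Hub, which you can think of as an “app store for Docker images.” Docker Hub has tens of thousands of public images created by the community that are readily available for use. It’s incredibly easy to search for images that meet your needs, ready to pull down and use with little-to-no modification.

- **Modularity and scalability:** Docker makes it easy to break out your application’s functionality into individual containers. For example, you might have your Postgres database running in one container and your Redis server in another, while your Node.js app is in yet another. With Docker, it’s become easier to link these containers together to create your application, making it easy to scale or update components independently in the future.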
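As a rough illustration of the modularity point, the Python sketch below builds, but does not run, the `docker` command lines that would start one container per component on a shared network. The network name and container names are hypothetical, and the image names assume the public `postgres`, `redis` and `node` images on Docker Hub. A real setup would also need each image's own configuration (credentials, ports, and an image containing the actual Node.js app), which is left out here.

```python
import shlex

# Hypothetical names for this example; any network name would do.
NETWORK = "myapp-net"
SERVICES = [
    {"name": "db", "image": "postgres"},
    {"name": "cache", "image": "redis"},
    {"name": "web", "image": "node"},
]


def docker_commands(network, services):
    """Build (but do not run) the docker CLI calls for each service."""
    commands = [["docker", "network", "create", network]]
    for service in services:
        commands.append([
            "docker", "run", "--detach",
            "--name", service["name"],
            "--network", network,
            service["image"],
        ])
    return commands


for command in docker_commands(NETWORK, SERVICES):
    print(shlex.join(command))
```

Because each component is its own container, replacing or scaling one of them means changing a single entry in `SERVICES` rather than rebuilding the whole application.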

Last but not least, who doesn’t love the Docker whale? ;)
